3D reconstruction

Note! 3D reconstruction is currently part of the Varjo Experimental SDK. API-breaking changes may occur in future releases. We are always happy to hear how you are using or planning to use 3D reconstruction, and appreciate all user feedback.

The Varjo MR Experimental API provides methods to reconstruct and query real-world geometry in real time using Varjo XR headsets. The point cloud API is optimized for detailed rendering of real-world geometry. The meshing API is optimized for ray casting, object placement, physics, and other common interactions.

Point cloud API

Usage

Note that there was a breaking change in the experimental 3D reconstruction API between the Varjo Base 3.2.2 and 3.3.0 releases. The information below refers to the latest specification, which is still experimental.

A client application may enable point cloud 3D reconstruction by calling varjo_MRSetReconstruction in the Varjo MR API.

The client can call varjo_MRBeginPointCloudSnapshot to schedule a snapshot, which contains the current state of the point cloud. Snapshot completion status can be polled with varjo_MRGetPointCloudSnapshotStatus. A client can also access snapshot content with varjo_MRGetPointCloudSnapshotContent, and must release the snapshot content with varjo_MRReleasePointCloudSnapshot afterwards.

The system will keep track of incremental updates (deltas) starting from the snapshot until the client calls varjo_MREndPointCloudSnapshot. The client can access the delta in front of the queue with varjo_MRGetPointCloudDelta, and must pop deltas from the queue with varjo_MRPopPointCloudDelta before reading the next one.

Points in the point cloud contain id, position, color, normal, radius, and confidence information. The radii are calculated so that neighboring points overlap, forming a dense surface. Refer to the header Varjo_types_mr_experimental.h for details on point cloud point information.
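The snapshot-plus-delta flow described above can be mirrored on the client side by keeping a local copy of the point cloud keyed by point id. The following is a minimal sketch of that bookkeeping only. It does not call the Varjo API: `Point`, `Delta`, `applyDelta`, and `drainDeltas` are hypothetical stand-ins, and the assumption that a delta carries a list of changed points and a list of removed ids should be checked against Varjo_types_mr_experimental.h. The `std::deque` stands in for the system-side delta queue, where reading the front and popping would correspond to varjo_MRGetPointCloudDelta and varjo_MRPopPointCloudDelta.

```cpp
#include <cassert>
#include <cstddef>
#include <cstdint>
#include <deque>
#include <unordered_map>
#include <vector>

// Hypothetical stand-ins for illustration; these are NOT the SDK types.
struct Point {
    std::uint32_t id;  // unique point id
    float x, y, z;     // position only; color, normal, etc. omitted
};

// Assumed delta layout: points added or updated, plus ids of removed points.
struct Delta {
    std::vector<Point> changed;
    std::vector<std::uint32_t> removed;
};

using PointMap = std::unordered_map<std::uint32_t, Point>;

// Apply one delta to the local copy: erase removed ids, then upsert changes.
void applyDelta(PointMap& cloud, const Delta& delta) {
    for (std::uint32_t id : delta.removed) {
        cloud.erase(id);
    }
    for (const Point& p : delta.changed) {
        cloud[p.id] = p;
    }
}

// Drain the queue front to back: read the front delta, apply it, pop it.
// Returns the number of deltas processed.
std::size_t drainDeltas(PointMap& cloud, std::deque<Delta>& queue) {
    std::size_t processed = 0;
    while (!queue.empty()) {
        applyDelta(cloud, queue.front());
        queue.pop_front();  // pop before reading the next delta
        ++processed;
    }
    return processed;
}
```

Keying the local copy by id keeps each delta cheap to apply, since only the points named in the delta are touched.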

Refer to the header Varjo_mr_experimental.h for the point cloud API and more detailed usage documentation.

Supported SDKs

You can access the point cloud API through the Varjo Experimental SDK.

Known issues affecting the experimental release

- Point radius is defined as the distance between a point and the most distant “connected” point. For this reason one may observe very large point radii when querying the point cloud near jump edges.

- Point cloud colors are normalized to 6500K, and may therefore appear to have a warm tint when viewed in indoor lighting and in the absence of proper white balancing. Please refer to Creating Realistic MR or the MeshingExample for instructions on how to perform white balance normalization.
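One possible client-side way to cope with the very large radii near jump edges is to clamp each radius before rendering. This is only a sketch of a workaround, not something the SDK prescribes: `clampRadius` is a hypothetical helper, and a suitable maximum radius is an application-specific assumption.

```cpp
#include <algorithm>
#include <cassert>

// Hypothetical helper: limit a point radius (in meters) to maxRadius so that
// outliers near jump edges do not render as oversized splats.
float clampRadius(float radius, float maxRadius) {
    assert(maxRadius > 0.0f);
    return std::min(radius, maxRadius);
}
```

For example, `clampRadius(0.5f, 0.05f)` yields `0.05f`, while a radius already below the limit is returned unchanged.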

Meshing API

To allow client applications to efficiently query parts of the reconstructed geometry, the mesh is divided into a grid of fixed-size chunks. Chunk size is determined by the chunksPerMeter parameter in varjo_MeshReconstructionConfig, accessible using varjo_MRGetMeshReconstructionConfig. In the experimental release, chunksPerMeter for meshing is configured to 1, corresponding to a chunk size of 1 m x 1 m x 1 m.
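The relationship between chunksPerMeter and the chunk grid can be illustrated with a little arithmetic. The sketch below assumes the grid is axis-aligned, anchored at the tracking origin, and indexed by rounding down. Those conventions, along with the names `ChunkIndex`, `chunkSizeMeters`, and `chunkContaining`, are assumptions for illustration and are not taken from the SDK headers.

```cpp
#include <cassert>
#include <cmath>

// Hypothetical integer grid coordinate of a chunk.
struct ChunkIndex {
    int x, y, z;
};

// Edge length of one chunk in meters: 1 / chunksPerMeter.
double chunkSizeMeters(int chunksPerMeter) {
    assert(chunksPerMeter > 0);
    return 1.0 / chunksPerMeter;
}

// Index of the chunk containing a position given in meters. Assumes the grid
// is anchored at the origin and that indices round toward negative infinity.
ChunkIndex chunkContaining(double px, double py, double pz, int chunksPerMeter) {
    assert(chunksPerMeter > 0);
    return ChunkIndex{
        static_cast<int>(std::floor(px * chunksPerMeter)),
        static_cast<int>(std::floor(py * chunksPerMeter)),
        static_cast<int>(std::floor(pz * chunksPerMeter)),
    };
}
```

With chunksPerMeter set to 1, the position (1.5, -0.2, 0.0) falls into chunk (1, -1, 0) under these assumptions.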

A client application may enable mesh 3D reconstruction by calling varjo_MRSetMeshReconstruction in the Varjo MR API.

The client can then use varjo_MRGetMeshChunkDescriptions to fetch an array of chunks which have had their geometry changed after a given timestamp. Note that in the first experimental release, all mesh chunks are always returned.

The client may then lock all or some of those chunks using varjo_MRLockMeshChunkContentsBuffer and access their contents using varjo_MRGetMeshChunkContentsBufferData. The client must call varjo_MRUnlockMeshChunkContentsBuffer to release the locks after access operations are completed.
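Because every lock must be paired with an unlock, a C++ client may want to wrap the lock, access, and unlock steps so that the unlock also runs when the access code exits early or throws. The sketch below shows that pattern generically. `UnlockGuard` and `withLockedChunks` are hypothetical helpers, and the three callables are placeholders for whatever code wraps varjo_MRLockMeshChunkContentsBuffer, varjo_MRGetMeshChunkContentsBufferData, and varjo_MRUnlockMeshChunkContentsBuffer. No SDK signatures are assumed here.

```cpp
#include <cassert>
#include <functional>
#include <utility>

// Runs the stored callable when it goes out of scope.
class UnlockGuard {
public:
    explicit UnlockGuard(std::function<void()> unlock) : unlock_(std::move(unlock)) {}
    ~UnlockGuard() {
        if (unlock_) unlock_();
    }
    UnlockGuard(const UnlockGuard&) = delete;
    UnlockGuard& operator=(const UnlockGuard&) = delete;

private:
    std::function<void()> unlock_;
};

// Hypothetical wrapper: lock, run the access code, and always unlock after.
// The guard is created only after lock() succeeds, so a failed lock is not
// followed by an unlock.
template <typename Lock, typename Access, typename Unlock>
void withLockedChunks(Lock lock, Access access, Unlock unlock) {
    lock();
    UnlockGuard guard(unlock);
    access();
}
```

The guard keeps the unlock call in one place, which makes it harder to leave chunk buffers locked by accident.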

Mesh vertices contain color and normal information as 32-bit floating point values. Refer to the header Varjo_types_mr_experimental.h for details on meshing types.

Refer to the header Varjo_mr_experimental.h for the meshing API and more detailed usage documentation.

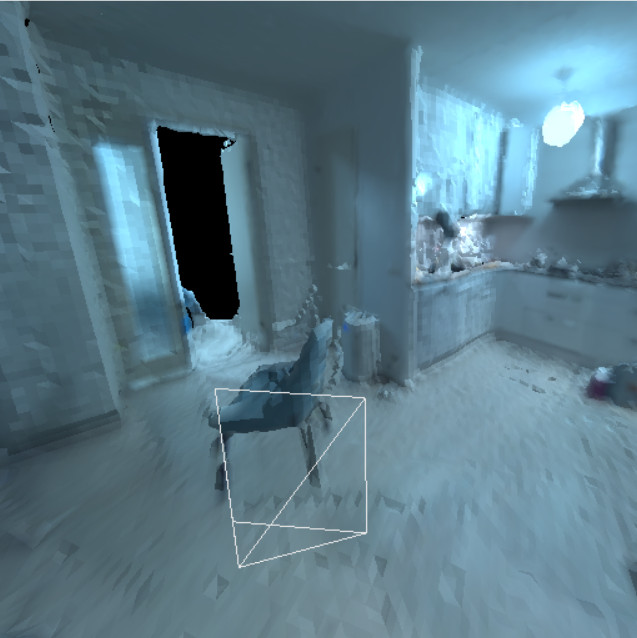

Examples

The experimental SDK contains MeshingExample, which demonstrates how to fetch, render, and export meshes reconstructed using the 3D reconstruction API. The example also demonstrates how the white balance of a mesh can be matched with that of the video pass-through cameras when using the mesh for rendering in mixed reality. Please refer to the header files inside the project for more details.

Supported SDKs

The meshing API can be accessed through the Varjo Experimental SDK.

Known issues affecting the experimental release

- varjo_MRGetMeshChunkDescriptions does not yet support filtering chunks by timestamp. The updatedAfter parameter for this function only supports a value of 0, and non-zero values are ignored. Chunk timestamps are always set to 0.

- Meshing is limited to an 8 m x 8 m x 8 m area centered around the tracking origin. For this reason, we recommend that you perform SteamVR room setup or Varjo inside-out tracking calibration in the center of the room.

- Mesh vertex colors are normalized to 6500K, and may therefore appear to have a warm tint when viewed in indoor lighting and in the absence of proper white balancing. Please refer to Creating Realistic MR or the MeshingExample for instructions on how to perform white balance normalization.
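The meshing volume limit listed above means that geometry more than 4 m from the tracking origin along any axis is not reconstructed. A client that places content may want to check positions against that volume first. The sketch below assumes the volume is an axis-aligned cube in tracking space with an inclusive boundary. Both of these, and the names `kMeshingExtentMeters` and `insideMeshingVolume`, are assumptions for illustration.

```cpp
#include <cassert>
#include <cmath>

// Edge length of the meshing volume in meters, as stated in the known issues.
constexpr double kMeshingExtentMeters = 8.0;

// Returns true if a tracking-space position (in meters) lies inside the cube
// centered on the tracking origin. Axis alignment is an assumption.
bool insideMeshingVolume(double x, double y, double z) {
    const double halfExtent = kMeshingExtentMeters / 2.0;
    return std::fabs(x) <= halfExtent &&
           std::fabs(y) <= halfExtent &&
           std::fabs(z) <= halfExtent;
}
```

For instance, a position at (3.9, -3.9, 0.0) is inside the volume, while (4.5, 0.0, 0.0) is outside.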