Rendering to Varjo headsets

Note: You are currently viewing documentation for a beta or an older version of Varjo.

Rendering for Varjo HMDs is very similar to rendering for other HMDs. The main difference is that to render images at optimal resolution for the Bionic Display, applications need to submit four views instead of two. This page gives an overview of the current Varjo rendering API, also known as Layer API, and explains its features.

Starting from release 2.1, Layer API is the only way to render for Varjo devices. If you have written an application on top of the older API, referred to as Legacy API, see the migration guide for how to move over to the current rendering API.

Important: If you are not familiar with the basics of the Varjo display system, it is recommended that you familiarize yourself with them before continuing.

Introduction

In the simplest form, rendering for Varjo devices consists of the following steps:

- Enumerate views

- Create render targets (swap chains)

- Wait for the optimal time to start rendering

- Acquire the target swap chain image and render to it

- Release the swap chain image

- Submit the rendered contents as a single layer

Currently supported rendering APIs are DirectX 11, DirectX 12, and OpenGL. Vulkan will be supported in the future.

Some fundamental concepts of the rendering API are described first. The second part of the document focuses on the application rendering flows.

Concepts

Varjo rendering API (Layer API) has two important concepts for frame rendering: swap chains, which represent images that can be submitted to Varjo compositor, and layers, which make up the final image out of the rendered swap chains. In addition, views are used to represent information about the display system.

Views

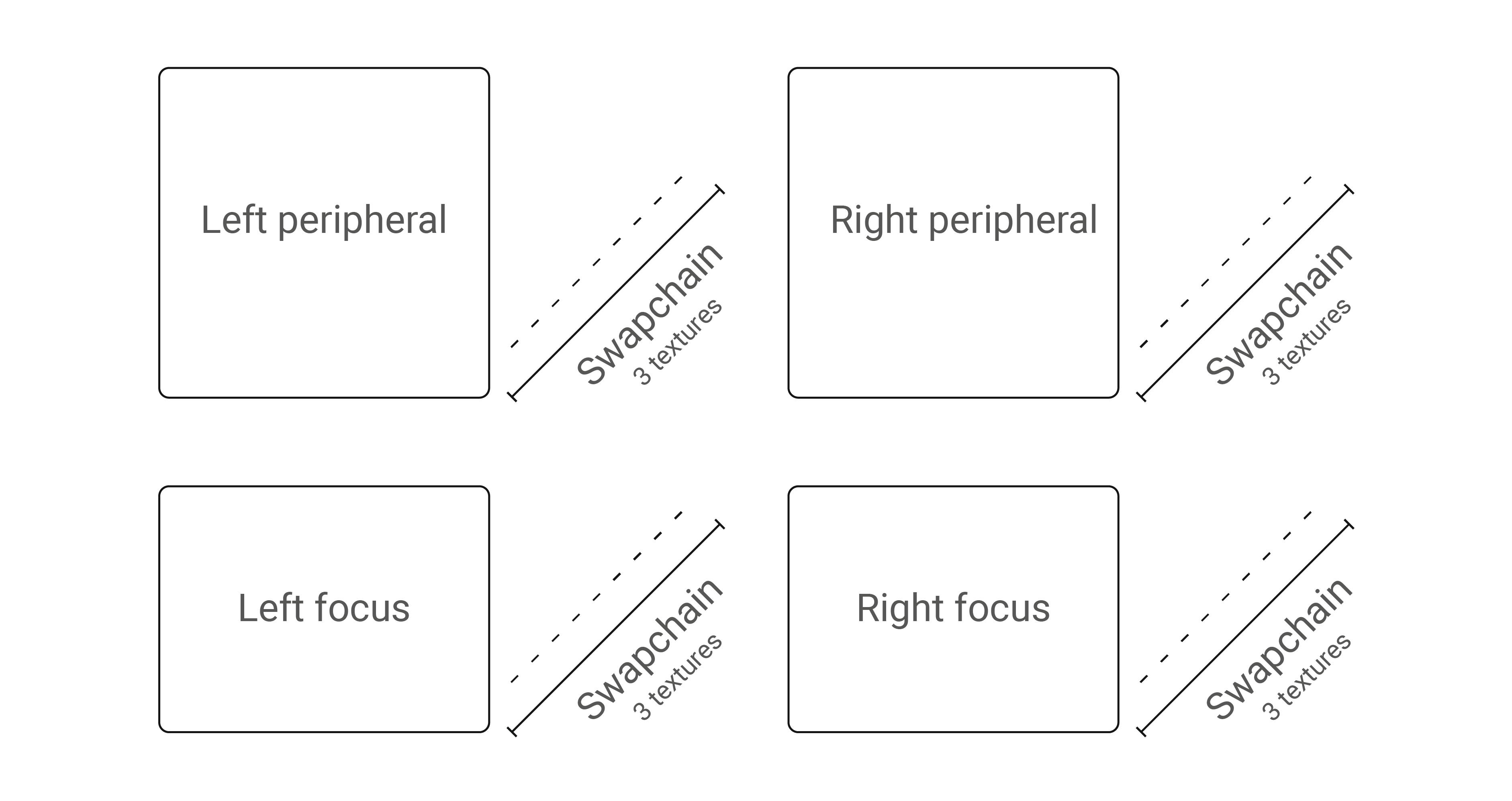

Varjo’s Bionic Display is a combination of two displays for each eye. In Varjo API, the two different displays are identified by separate varjo_DisplayType_* values, one for focus display (the sharp area) and one for context display (the peripheral area).

To enumerate native views that can be rendered, Varjo API contains the varjo_GetViewCount function to query the number of views, and varjo_GetViewDescription to query information for each view. The latter returns varjo_ViewDescription, which contains information about the eye (left or right), display (focus or context), and resolution for optimal visual quality. Important: Applications must not assume that the returned view count or data stays constant even for the same device type.

Enumerated views are always returned in the same order: [LEFT_CONTEXT, RIGHT_CONTEXT, LEFT_FOCUS, RIGHT_FOCUS]. That is, left comes before right, and the context display comes before the focus display. Other Varjo APIs that accept views as parameters assume that the views are given in the same order as returned by enumeration.
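To make the ordering concrete, the following C++ sketch computes the index of a view from its eye and display under the order documented above. Eye, Display and viewIndex are hypothetical names used only for illustration; they are not part of the Varjo API. Since applications must not assume that the view count or view data stays constant, real code should read the eye and display of each view from varjo_GetViewDescription rather than hard-code this mapping.

```cpp
#include <cassert>

// Hypothetical stand-ins for illustration only; the real eye and display of
// each view are reported by varjo_GetViewDescription.
enum class Eye { Left = 0, Right = 1 };
enum class Display { Context = 0, Focus = 1 };

// Returns the position of a view in the documented order:
// [LEFT_CONTEXT, RIGHT_CONTEXT, LEFT_FOCUS, RIGHT_FOCUS] for four native views,
// or [LEFT, RIGHT] for a plain stereo pair.
int viewIndex(Eye eye, Display display, int viewCount) {
    // This sketch only models the two layouts described in this document.
    assert(viewCount == 2 || viewCount == 4);
    const int eyeIndex = static_cast<int>(eye);
    if (viewCount == 2) {
        return eyeIndex;  // Stereo pair: left view first
    }
    // Context views come first; within each display, left comes first
    return static_cast<int>(display) * 2 + eyeIndex;
}
```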

The returned resolutions can be scaled by your application to trade performance for visual quality and vice versa.
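A minimal sketch of such scaling, assuming a single uniform quality factor applied to both dimensions. ViewSize and scaleViewSize are hypothetical helpers; the input would be the resolution reported by varjo_GetViewDescription for a view.

```cpp
#include <cassert>
#include <cmath>
#include <cstdint>

// Hypothetical helper type; in practice the recommended width and height
// come from the view description returned by varjo_GetViewDescription.
struct ViewSize {
    int32_t width;
    int32_t height;
};

// Scales a recommended view resolution by a quality factor.
// factor < 1.0 trades visual quality for performance; factor > 1.0 does the opposite.
// Results are rounded to the nearest pixel and never drop below 1x1.
ViewSize scaleViewSize(ViewSize recommended, double factor) {
    assert(factor > 0.0);
    auto scale = [factor](int32_t value) {
        const auto scaled = static_cast<int32_t>(std::lround(value * factor));
        return scaled < 1 ? 1 : scaled;
    };
    return {scale(recommended.width), scale(recommended.height)};
}
```

Scaling both axes by the same factor keeps the aspect ratio of each view, so only the render target and viewport sizes change; the projection matrices stay the same.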

Swap chains

A swap chain is an array or list of textures (not to be confused with texture arrays) that the application can submit to the Varjo compositor. For each frame, the swap chain has one active texture that the application needs to render into.

Only swap chain textures can be submitted to Varjo Compositor. The rendering API does not support submission of other textures. If the application cannot render directly into a swap chain texture, the application needs to copy the contents over to the swap chain for frame submission. [Appendix C](#Appendix-C-Projection-matrix) gives some pointers on how to do this copy with DirectX 11, DirectX 12 or OpenGL.

A swap chain can be created with varjo_*CreateSwapChain(); a separate variant exists for each supported graphics API. The main configuration is passed in the varjo_SwapChainConfig2 structure:

```cpp
struct varjo_SwapChainConfig2 {
    varjo_TextureFormat textureFormat;
    int32_t numberOfTextures;
    int32_t textureWidth;
    int32_t textureHeight;
    int32_t textureArraySize;
};
```

- textureFormat: The format of the swap chain. The formats listed below are always supported regardless of the graphics API; varjo_GetSupportedTextureFormats() can be used to query additional supported formats. All possible values can be found in Varjo_types.h. The most commonly used format for color swap chains is varjo_TextureFormat_R8G8B8A8_SRGB.
- numberOfTextures: Defines how many textures are created for the swap chain. The recommended value is 3, which allows the application to render optimally without getting blocked by the Varjo compositor.
- textureWidth, textureHeight, and textureArraySize: These depend on how the application wants to organize the swap chain data. This is discussed in more detail in the next sections.

Swap chain formats that are always supported:

- varjo_TextureFormat_R8G8B8A8_SRGB
- varjo_DepthTextureFormat_D32_FLOAT
- varjo_DepthTextureFormat_D24_UNORM_S8_UINT
- varjo_DepthTextureFormat_D32_FLOAT_S8_UINT

Data organization

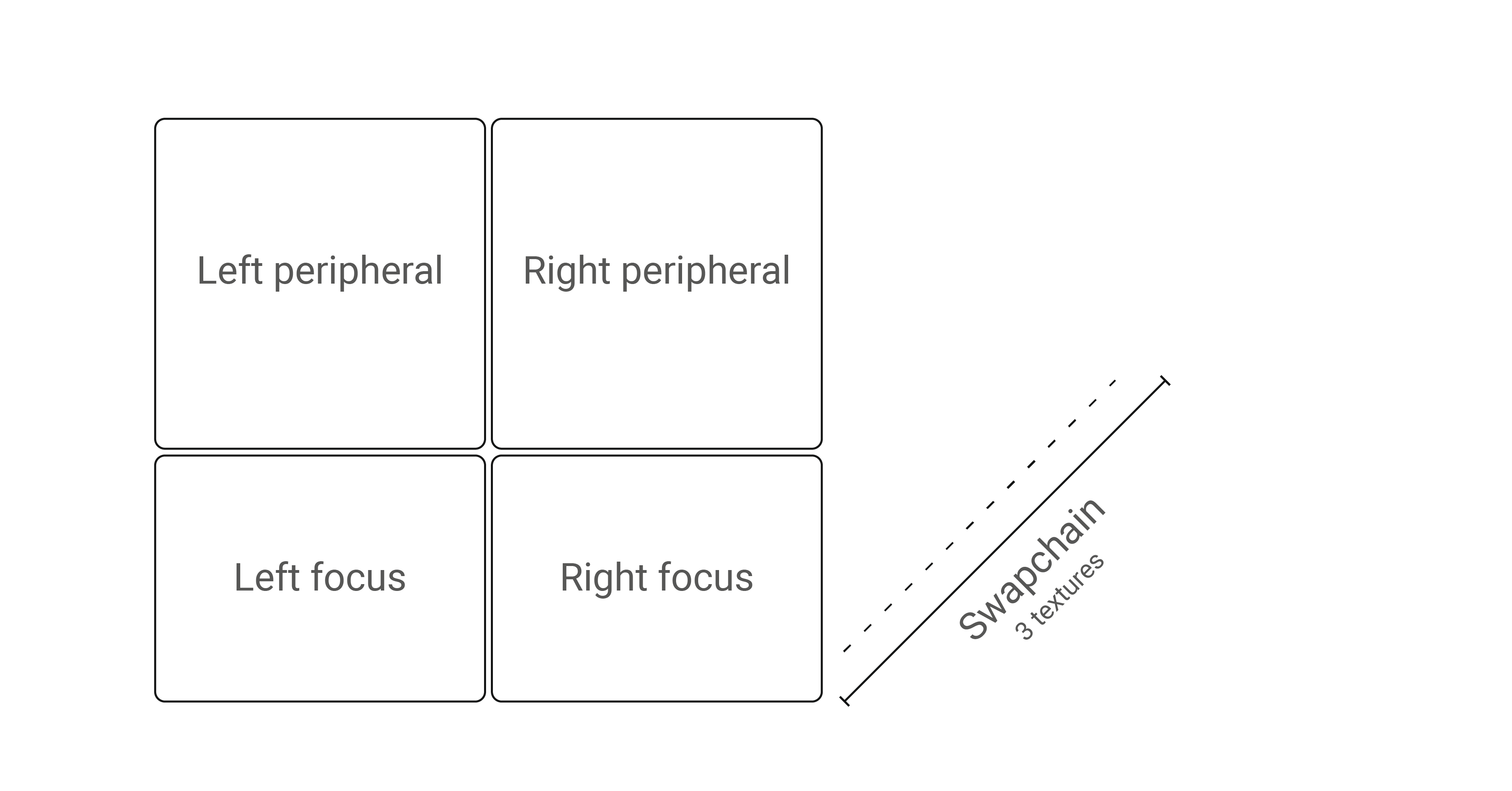

Applications are free to organize data in swap chains as they like. When submitting the rendered layers for final composition, any part of the swap chain can be picked as a data source with a viewport rectangle (and texture array index).

The simplest way is to create separate swap chains for each submitted (native) view.

Another way is to pack all views into a single swap chain (atlas). To identify each view in the atlas, varjo_SwapChainViewport must be used with correct coordinates and extents.
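One possible atlas layout is sketched below in C++: views are placed side by side in a single row, in enumeration order. Size, Rect, AtlasLayout and packViewsInRow are hypothetical names. Each resulting rectangle would supply the coordinates and extents of a varjo_SwapChainViewport, and the totals would be used as the swap chain textureWidth and textureHeight.

```cpp
#include <cassert>
#include <cstdint>
#include <vector>

// Hypothetical types for illustration only.
struct Size { int32_t width; int32_t height; };
struct Rect { int32_t x; int32_t y; int32_t width; int32_t height; };

struct AtlasLayout {
    std::vector<Rect> viewports;  // One rectangle per view, in view order
    int32_t totalWidth = 0;       // Candidate swap chain textureWidth
    int32_t totalHeight = 0;      // Candidate swap chain textureHeight
};

// Packs views side by side in a single row: each view starts where the
// previous one ended, and the atlas is as tall as the tallest view.
AtlasLayout packViewsInRow(const std::vector<Size>& viewSizes) {
    AtlasLayout layout;
    for (const Size& size : viewSizes) {
        assert(size.width > 0 && size.height > 0);
        layout.viewports.push_back({layout.totalWidth, 0, size.width, size.height});
        layout.totalWidth += size.width;
        if (size.height > layout.totalHeight) {
            layout.totalHeight = size.height;
        }
    }
    return layout;
}
```

A single row is the simplest layout, but graphics APIs cap texture dimensions (16384 texels per side on DirectX 11 hardware), so an application with large views may prefer to stack rows, for example context views in one row and focus views in another.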

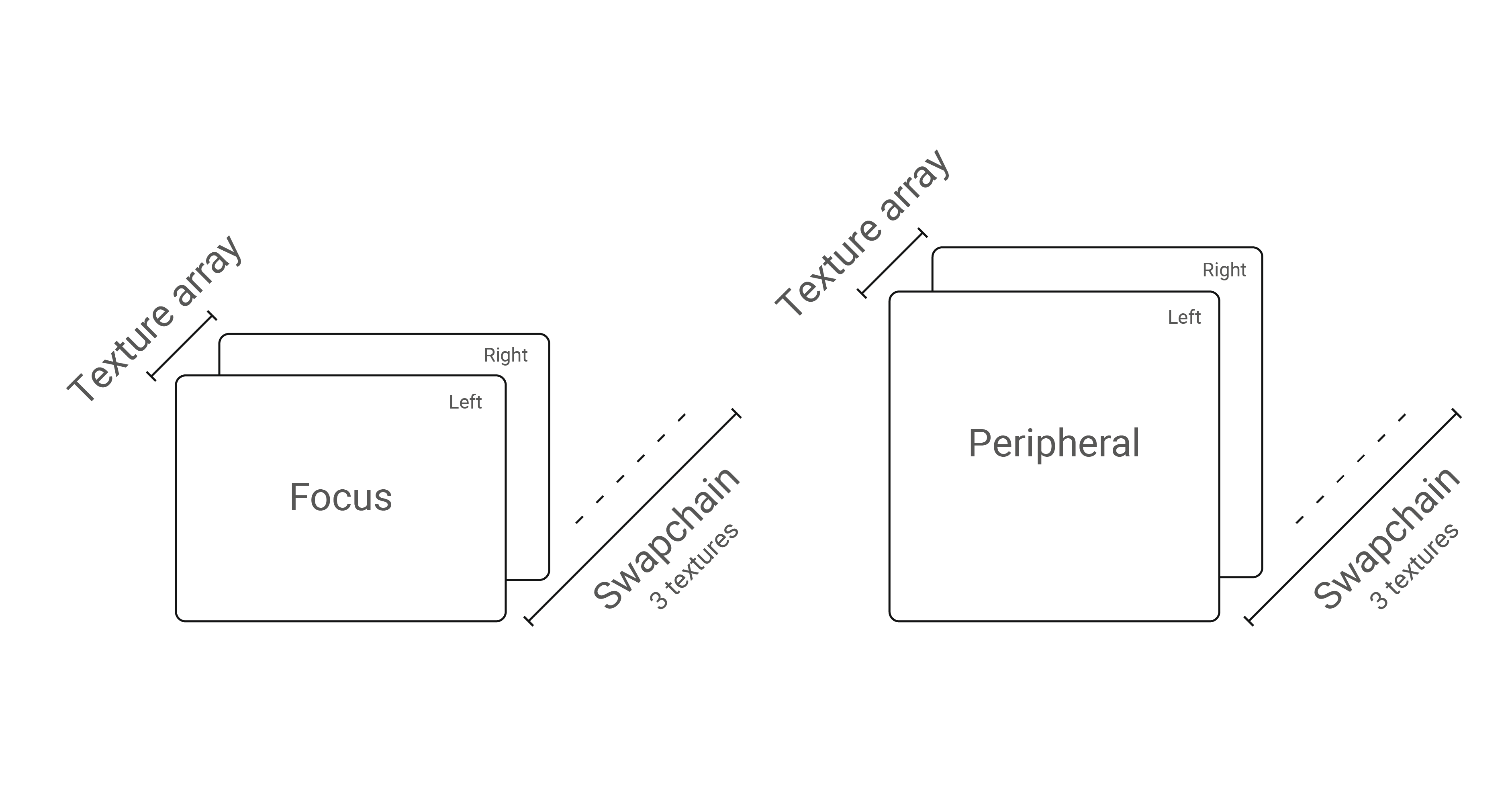

The swap chain API also supports texture arrays. If textureArraySize > 1 is passed in the swap chain configuration, each swap chain texture becomes a texture array of the given size. When arrays are used at all, textureArraySize = 2 is typical; in some cases, this enables optimizations for rendering stereo pairs.

Layers

A layer is a data structure which provides information about a single sheet of the final image. The final shown image is composited by Varjo compositor from all the layers submitted by all active client applications, including the Varjo system apps.

Currently only one layer type is supported, but more will be added in the future.

Common functionality

To support multiple layer types in the C API, each layer struct (regardless of the type) starts with varjo_LayerHeader:

```cpp
struct varjo_LayerHeader {
    varjo_LayerType type;
    varjo_LayerFlags flags;
};
```

In this structure, type identifies the layer and must always match the actual layer type (for example, varjo_LayerMultiProjType for varjo_LayerMultiProj).

flags can be used to modify the layer behavior in the compositing phase, for example to enable alpha blending. The default value is varjo_LayerFlagNone, which means that the application submits a simple opaque color layer.

The following flags are available:

- varjo_LayerFlag_BlendMode_AlphaBlend: Enables (premultiplied) alpha blending for the layer. Applications must supply a valid alpha. Premultiplied blending means that the compositor assumes that the application has already multiplied the alpha into the color (RGB) values.
- varjo_LayerFlag_DepthTesting: Enables depth testing against the other layers. Layers are always rendered in layer order; however, when this flag is given, the compositor only renders fragments whose depth is closer to the camera than that of the previously rendered layers.
- varjo_LayerFlag_InvertAlpha: When given, the alpha channel is inverted after sampling.
- varjo_LayerFlag_UsingOcclusionMesh: Must be given if the application has rendered the occlusion mesh, to enable certain optimizations in the compositor. See below for how to render the occlusion mesh.
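For reference, the C++ sketch below shows what premultiplied alpha means in practice, using the standard premultiplied "over" operator. Rgba, premultiply and over are hypothetical names, and the blend is the general textbook formula, not the Varjo compositor's implementation.

```cpp
#include <cassert>

// Illustrative RGBA color with components in [0, 1].
struct Rgba { float r, g, b, a; };

// Converts straight (non-premultiplied) alpha to premultiplied alpha:
// the color channels are multiplied by alpha before submission.
Rgba premultiply(Rgba c) {
    assert(c.a >= 0.0f && c.a <= 1.0f);
    return {c.r * c.a, c.g * c.a, c.b * c.a, c.a};
}

// Standard premultiplied "over" operator: src is drawn on top of dst.
// Because src is already premultiplied, its color is added without
// another multiplication by src.a.
Rgba over(Rgba src, Rgba dst) {
    const float k = 1.0f - src.a;
    return {src.r + dst.r * k, src.g + dst.g * k, src.b + dst.b * k, src.a + dst.a * k};
}
```

If an application enabled alpha blending but submitted straight (non-premultiplied) colors, semi-transparent areas would appear too bright, because the color term would not be weighted by alpha.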

See Varjo_types_layers.h for more information.

Projection layer

The only layer type provided at the moment is the multi-projection layer. Each layer of this type contains a number of views, which are tied to a certain reference space.

Usually the number of supplied views matches the count of native views (as given by varjo_GetViewCount), but applications can also supply just two views for stereo rendering. The views are assumed to be in the following order:

- When rendering the native number of views, the same order is assumed as returned by view enumeration.

- When rendering just two views for stereo rendering (regardless of the device), the left view is assumed to be given first.

```cpp
struct varjo_LayerMultiProj {
    struct varjo_LayerHeader header;
    varjo_Space space;
    int32_t viewCount;
    struct varjo_LayerMultiProjView* views;
};
```

varjo_LayerMultiProjView describes the parameters for a single view:

```cpp
struct varjo_LayerMultiProjView {
    struct varjo_ViewExtension* extension;
    struct varjo_Matrix projection;
    struct varjo_Matrix view;
    struct varjo_SwapChainViewport viewport;
};
```

To get the optimal experience, applications should always render the number of views that varjo_GetViewCount reports and match the rendering resolution to that in the view description (given by varjo_GetViewDescription). The Varjo compositor also supports submission of a stereo pair; in this case, varjo_LayerMultiProj::views should contain just two views (left first) for the context displays.

Note: View descriptions returned by varjo_GetViewDescription() are in the same order as the views in the varjo_FrameInfo structure filled in by varjo_WaitSync().

Views can also have one or multiple extensions (via extension chaining). The available extensions are described below.

Depth buffer extension (varjo_ViewExtensionDepth)

varjo_ViewExtensionDepth allows applications to submit a depth buffer with the color buffer. Depth buffer submission automatically enables 6-DOF (positional) timewarp in the compositor. As positional timewarp can correct for movement in addition to rotation, the final rendering will appear smoother in the case of headset movement, even with low FPS. In addition to positional timewarp, depth buffer submission also allows enabling depth testing in composition, which is important especially in some mixed reality use cases. If possible, applications should always submit the depth buffer.

Each depth buffer needs a separate swap chain, similar to color buffers. The only difference in swap chain creation is the format.

```cpp
struct varjo_ViewExtensionDepth {
    struct varjo_ViewExtension header;
    double minDepth;
    double maxDepth;
    double nearZ;
    double farZ;
    struct varjo_SwapChainViewport viewport;
};
```

minDepth and maxDepth define the range of values that the application renders into the depth buffer. Unless a depth viewport transformation is used, minDepth = 0 and maxDepth = 1 should be used regardless of the rendering API. Note that the depth buffer value range is not the same as the clip-space depth range: for example, OpenGL clip space uses a [-1, 1] depth range by default, while the values written to the depth buffer are still in [0, 1].

nearZ and farZ describe the near and far planes. If nearZ < farZ, the compositor assumes that the application renders forward depth (a view distance of nearZ maps to minDepth in the depth buffer). A reversed Z-buffer can be indicated by swapping the nearZ and farZ values.
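The relationship between view distance and stored depth can be sketched with the standard perspective depth formula, assuming a conventional projection matrix with a [0, 1] depth range. distanceToDepth and depthToDistance are hypothetical helpers, not the compositor's code. With this formula, passing swapped nearZ and farZ values yields reversed Z, consistent with the convention described above: a distance of nearZ always maps to minDepth, and a distance of farZ to maxDepth.

```cpp
#include <cassert>

// Maps a positive view-space distance to a depth buffer value for a standard
// perspective projection, remapped to [minDepth, maxDepth].
// nearZ < farZ gives forward depth; nearZ > farZ gives reversed Z.
double distanceToDepth(double distance, double nearZ, double farZ,
                       double minDepth = 0.0, double maxDepth = 1.0) {
    assert(distance > 0.0 && nearZ > 0.0 && farZ > 0.0 && nearZ != farZ);
    const double normalized = farZ * (distance - nearZ) / (distance * (farZ - nearZ));
    return minDepth + normalized * (maxDepth - minDepth);
}

// Inverse of distanceToDepth: recovers the view-space distance from a depth value.
double depthToDistance(double depth, double nearZ, double farZ,
                       double minDepth = 0.0, double maxDepth = 1.0) {
    assert(maxDepth != minDepth);
    const double normalized = (depth - minDepth) / (maxDepth - minDepth);
    return nearZ * farZ / (farZ - normalized * (farZ - nearZ));
}
```

The sketch does not handle an infinite far plane, which needs a separate formula.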

Velocity buffer extension (varjo_ViewExtensionVelocity)

varjo_ViewExtensionVelocity allows applications to submit a velocity buffer in addition to color and depth buffers. The velocity buffer contains per-pixel velocities of motion within the content itself, for example from animations and object movement in general.

Motion Prediction smooths animations and object movement, especially in low-FPS scenarios. The velocity buffer improves prediction, since motion estimation in the compositor can use the velocities specified by the application instead of estimating them.

Applications should submit the velocity buffer if possible.

The velocity buffer requires a separate swap chain, similar to color and depth buffers. The extension structure is shown below, followed by the buffer format:

```cpp
struct varjo_ViewExtensionVelocity {
    struct varjo_ViewExtension header;
    double velocityScale;
    varjo_Bool includesHMDMotion;
    struct varjo_SwapChainViewport viewport;
};
```

velocityScale is a multiplier applied to all velocity vectors in the surface so that, after scaling, the velocities are in pixels per second.

Velocity can be expressed either with or without head movement. The includesHMDMotion flag controls which one is used.

Velocities should be encoded as two 16-bit values into an RGBA8888 texture as follows: R = high X, G = low X, B = high Y, A = low Y.

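Sample code for packing velocities:

```hlsl
// PRECISION is a constant chosen by the application (fixed-point steps per
// unit of velocity); it is not defined in this snippet.
uint4 packVelocity(float2 floatingPoint) {
    // Convert to signed fixed point (float-to-int conversion truncates)
    int2 fixedPoint = int2(floatingPoint * PRECISION);
    // Keep the low 16 bits of each component
    uint2 temp = uint2(fixedPoint.x & 0xFFFF, fixedPoint.y & 0xFFFF);
    // R = high X, G = low X, B = high Y, A = low Y
    return uint4(temp.x >> 8, temp.x & 0xFF, temp.y >> 8, temp.y & 0xFF);
}
```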
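To check the encoding on the CPU, the following C++ sketch packs a velocity into the four 8-bit channels described above and unpacks it again. packVelocity, unpackVelocity and the precision parameter (standing in for PRECISION in the shader) are assumptions for illustration. Unlike the shader, the sketch rounds instead of truncating and clamps values to the signed 16-bit range. It also assumes that an application packing with PRECISION would set velocityScale to 1.0 / PRECISION, so that the scaled values come out in pixels per second.

```cpp
#include <array>
#include <cassert>
#include <cmath>
#include <cstdint>

// Packs one velocity (X, Y) into four 8-bit channels:
// R = high X, G = low X, B = high Y, A = low Y.
std::array<uint8_t, 4> packVelocity(double vx, double vy, double precision) {
    assert(precision > 0.0);
    auto toBits = [precision](double v) {
        long fixed = std::lround(v * precision);
        if (fixed < INT16_MIN) fixed = INT16_MIN;  // Clamp to signed 16-bit range
        if (fixed > INT16_MAX) fixed = INT16_MAX;
        return static_cast<uint16_t>(fixed);       // Two's complement bit pattern
    };
    const uint16_t x = toBits(vx);
    const uint16_t y = toBits(vy);
    return {static_cast<uint8_t>(x >> 8), static_cast<uint8_t>(x & 0xFF),
            static_cast<uint8_t>(y >> 8), static_cast<uint8_t>(y & 0xFF)};
}

// Reverses packVelocity: rebuilds each signed 16-bit value and divides by
// precision (the role assumed for velocityScale = 1.0 / precision).
std::array<double, 2> unpackVelocity(const std::array<uint8_t, 4>& rgba, double precision) {
    auto fromBytes = [precision](uint8_t high, uint8_t low) {
        const int32_t bits = (int32_t{high} << 8) | low;
        const int32_t value = bits >= 0x8000 ? bits - 0x10000 : bits;  // Sign-extend
        return value / precision;
    };
    return {fromBytes(rgba[0], rgba[1]), fromBytes(rgba[2], rgba[3])};
}
```

With a precision of 16, for example, the representable range is about ±2048 pixels per second in steps of 1/16 pixel per second, so the choice of PRECISION trades range against resolution.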

The Benchmark example includes example code showing how to use the velocity buffer in a real application.

Depth range extension (varjo_ViewExtensionDepthTestRange)

Depth buffer submission allows applications to enable depth testing. However, in some XR use cases the depth testing range needs to be limited. Inside these view-space limits, the compositor performs depth testing against the other layers; outside the limits, the layer is blended with the other layers in layer order.

As an example, depth testing range can be used for enabling hand visibility in virtual scenes. By enabling depth estimation for the video pass-through layer, applications can perform depth tests against this layer. However, as only hands should show up over the VR layer (and not the office room or objects around the user), depth testing needs to be enabled only for the short range near the user.

The extension that allows applications to limit the depth testing range is called varjo_ViewExtensionDepthTestRange.

```cpp
struct varjo_ViewExtensionDepthTestRange {
    struct varjo_ViewExtension header;
    double nearZ;
    double farZ;
};
```

nearZ and farZ define the range for which the depth test is active. These values are given in view-space coordinates.

```cpp
std::vector<varjo_LayerMultiProjView> views(viewCount);
...
std::vector<varjo_ViewExtensionDepth> depthExt(viewCount);
...
std::vector<varjo_ViewExtensionDepthTestRange> depthTestRangeExt(viewCount);
...
for (int i = 0; i < viewCount; i++) {
    views[i].extension = &depthExt[i].header;                // Attach depth buffer extension
    depthExt[i].header.next = &depthTestRangeExt[i].header;  // Chain depth test range extension
    depthTestRangeExt[i].header.type = varjo_ViewExtensionDepthTestRangeType;
    depthTestRangeExt[i].header.next = nullptr;              // End of the extension chain
    // Depth test will be enabled in the [0, 1m] range
    depthTestRangeExt[i].nearZ = 0;
    depthTestRangeExt[i].farZ = 1;
}
```

The order in which the extensions are chained does not matter. However, the depth test range extension has no effect if no depth buffer is provided.
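Why the chaining order does not matter can be illustrated with a simplified C++ model of an extension chain: each extension begins with a header holding its type and a pointer to the next extension, and a consumer finds an extension by walking the chain and comparing types. ExtHeader, DepthExt, DepthRangeExt, findExtension and the type constants are hypothetical stand-ins, not the actual Varjo definitions.

```cpp
#include <cassert>
#include <cstdint>

// Simplified local model of an extension header; not the real Varjo types.
struct ExtHeader {
    int64_t type;
    ExtHeader* next;
};

struct DepthExt { ExtHeader header; double nearZ; double farZ; };
struct DepthRangeExt { ExtHeader header; double nearZ; double farZ; };

constexpr int64_t kDepthType = 1;       // Hypothetical type value
constexpr int64_t kDepthRangeType = 2;  // Hypothetical type value

// Walks the chain and returns the first extension with the requested type,
// or nullptr. Because the lookup is by type, the chaining order is irrelevant.
const ExtHeader* findExtension(const ExtHeader* head, int64_t type) {
    for (const ExtHeader* ext = head; ext != nullptr; ext = ext->next) {
        if (ext->type == type) {
            return ext;
        }
    }
    return nullptr;
}
```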

Occlusion mesh

Due to lens distortion, some display pixels are not visible through the optics, so the application can stencil out those pixels to reduce shading workload and improve performance.

The mesh can be obtained by calling varjo_CreateOcclusionMesh() and must be freed with varjo_FreeOcclusionMesh(). It can be used either as a stencil mask or as a depth mask (rendered at the near plane) to reduce pixel fill in areas that will not be visible.

When submitting a layer that was rendered using the occlusion mesh, applications should set varjo_LayerFlag_UsingOcclusionMesh in the layer flags.

Rendering

Rendering flow for Varjo HMDs can be summarized in the following steps:

- Initialization
  1. Initialize the Varjo system with varjo_SessionInit.
  2. Query how many viewports need to be rendered with varjo_GetViewCount() and set up viewports using the information returned by varjo_GetViewDescription.
  3. Create as many swap chains as needed with varjo_D3D11CreateSwapChain, varjo_D3D12CreateSwapChain, or varjo_GLCreateSwapChain. For the format, use one that is always supported, or query support with varjo_GetSupportedTextureFormats.
  4. Enumerate swap chain textures with varjo_GetSwapChainImage and create render targets.
  5. Create frame info for per-frame data with varjo_CreateFrameInfo.

- Rendering (in the render thread)
  1. Call varjo_WaitSync to wait for the optimal time to start rendering. This fills in the varjo_FrameInfo structure with the latest pose data. Use the given projection matrix directly (see Appendix B) or build one from the tangents given by varjo_GetAlignedView.
  2. Begin rendering the frame by calling varjo_BeginFrameWithLayers.
  3. For each swap chain:
     - Acquire the swap chain image with varjo_AcquireSwapChainImage().
     - Render your frame into the swap chain texture at the index returned by the previous step.
     - Release the swap chain image with varjo_ReleaseSwapChainImage().
  4. Submit the textures with varjo_EndFrameWithLayers. This tells the Varjo runtime that it can now draw the submitted frame.

- Shutdown
  1. Free the allocated varjo_FrameInfo structure.
  2. Shut down the session by calling varjo_SessionShutDown().

Simple example code for these steps is shown next, with additional descriptions of the functions. The examples assume that a Renderer class exists which abstracts the actual frame rendering.

Initialization

The following example shows basic initialization. Use varjo_To<D3D11|GL>Texture to convert varjo_Texture to native types.

```cpp
varjo_Session* session = varjo_SessionInit();

// Initialize the rendering engine with the given LUID
// to make sure that the compositor and the application run on the same GPU
varjo_Luid luid = varjo_D3D11GetLuid(session);
Renderer renderer{luid};

// CalculateViewports, GetTotalWidth and GetTotalHeight are application helpers (not shown)
std::vector<varjo_Viewport> viewports = CalculateViewports(session);
const int viewCount = varjo_GetViewCount(session);

varjo_SwapChain* swapchain{nullptr};
varjo_SwapChainConfig2 config{};
config.numberOfTextures = 3;
config.textureHeight = GetTotalHeight(viewports);
config.textureWidth = GetTotalWidth(viewports);
config.textureFormat = varjo_TextureFormat_R8G8B8A8_SRGB;  // Supported by OpenGL and DirectX
config.textureArraySize = 1;
swapchain = varjo_D3D11CreateSwapChain(session, renderer.GetD3DDevice(), &config);

// Create as many render targets as there are textures in the swap chain
std::vector<Renderer::Target> renderTargets(config.numberOfTextures);
for (int swapChainIndex = 0; swapChainIndex < config.numberOfTextures; swapChainIndex++) {
    varjo_Texture swapChainTexture = varjo_GetSwapChainImage(swapchain, swapChainIndex);
    renderTargets[swapChainIndex] = renderer.initLayerRenderTarget(
        swapChainIndex, varjo_ToD3D11Texture(swapChainTexture), config.textureWidth, config.textureHeight);
}

varjo_FrameInfo* frameInfo = varjo_CreateFrameInfo(session);
```

Frame setup

In this example, we submit one layer. We preallocate a vector with as many views as the runtime supports.

```cpp
std::vector<varjo_LayerMultiProjView> views(viewCount);
for (int i = 0; i < viewCount; i++) {
    const varjo_Viewport& viewport = viewports[i];
    // Each view samples its own rectangle of the atlas swap chain
    views[i].viewport = varjo_SwapChainViewport{swapchain, viewport.x, viewport.y, viewport.width, viewport.height, 0, 0};
}

varjo_LayerMultiProj projLayer{{varjo_LayerMultiProjType, 0}, 0, viewCount, views.data()};
varjo_LayerHeader* layers[] = {&projLayer.header};
// frameNumber is updated every frame before submission
varjo_SubmitInfoLayers submitInfoWithLayers{frameInfo->frameNumber, 0, 1, layers};
```

Rendering

The render loop starts with a call to varjo_WaitSync(). This function blocks until the optimal moment for the application to start rendering.

varjo_WaitSync() fills in the varjo_FrameInfo structure passed to it. This data structure contains all important information related to the current frame and pose. varjo_FrameInfo has three fields:

- views: Array of varjo_ViewInfo, as many as the count returned by varjo_GetViewCount(). Views are in the same order as when enumerated with varjo_GetViewDescription.
- displayTime: Predicted time, in nanoseconds, when the rendered frame will be shown in the HMD.
- frameNumber: Number of the current frame. Monotonically increasing.

For each view, varjo_ViewInfo contains the view and projection matrices and whether the view should be rendered or not.

- viewMatrix: World-to-eye matrix (column major).
- projectionMatrix: Projection matrix (column major). Applications need to patch the depth transform of the matrix using varjo_UpdateNearFarPlanes. Applications should not assume that this matrix is constant.

```cpp
while (!quitRequested) {
    // Waits for the best moment to start rendering the next frame
    // (can be executed in another thread)
    varjo_WaitSync(session, frameInfo);

    // Indicates to the compositor that the application is about to
    // start real rendering work for the frame (has to be executed in the render thread)
    varjo_BeginFrameWithLayers(session);

    int swapChainIndex;
    // Locks the swap chain image and gets the index of the texture
    // within the swap chain that the application should render to
    varjo_AcquireSwapChainImage(swapchain, &swapChainIndex);
    renderTargets[swapChainIndex].clear();

    // displayTime is in nanoseconds; startTime is captured by the application earlier
    float time = (frameInfo->displayTime - startTime) / 1000000000.0f;
    for (int i = 0; i < viewCount; i++) {
        varjo_Viewport viewport = viewports[i];
        // Update depth transform to match the desired one
        varjo_UpdateNearFarPlanes(frameInfo->views[i].projectionMatrix, varjo_ClipRangeZeroToOne, 0.01, 300);
        // All views are rendered into the acquired atlas texture
        renderTargets[swapChainIndex].renderView(viewport.x, viewport.y, viewport.width, viewport.height,
            frameInfo->views[i].projectionMatrix, frameInfo->views[i].viewMatrix, time);
    }

    // Unlocks the swap chain image
    varjo_ReleaseSwapChainImage(swapchain);
}
```

Frame submission

varjo_SubmitInfoLayers describes the list of layers that will be submitted to the compositor:

- frameNumber: Fill with the frame number given by varjo_WaitSync.
- layerCount: How many layers there are in the layer array.
- layers: Pointer to the array of layers to be submitted.

```cpp
...
// Copy view and projection matrices (std::copy requires <algorithm>)
for (int i = 0; i < viewCount; i++) {
    const varjo_ViewInfo& viewInfo = frameInfo->views[i];
    std::copy(viewInfo.projectionMatrix, viewInfo.projectionMatrix + 16, views[i].projection.value);
    std::copy(viewInfo.viewMatrix, viewInfo.viewMatrix + 16, views[i].view.value);
}
submitInfoWithLayers.frameNumber = frameInfo->frameNumber;
varjo_EndFrameWithLayers(session, &submitInfoWithLayers);
```

Adding depth

Compared to the earlier rendering API, Layer API adds the ability to submit depth buffers. See the depth buffer extension (varjo_ViewExtensionDepth) described above.

Creating depth swapchain

Depth swap chains are created in the same way as color swap chains; only the format differs:

```cpp
varjo_SwapChainConfig2 config2{};
config2.numberOfTextures = 3;
config2.textureArraySize = 1;
config2.textureFormat = varjo_DepthTextureFormat_D32_FLOAT;
config2.textureWidth = GetTotalWidth(viewports);
config2.textureHeight = GetTotalHeight(viewports);
varjo_SwapChain* depthSwapchain = varjo_D3D11CreateSwapChain(session, renderer.GetD3DDevice(), &config2);
```

All current depth formats are supported by both DirectX 11 and OpenGL, so the format support does not need to be separately queried.

Note: If the application cannot render into a swap chain depth texture, the best performance can be achieved by creating a varjo_DepthTextureFormat_D32_FLOAT swap chain and copying the depth data with a simple shader. Otherwise, the runtime has to perform this conversion due to limitations in DirectX 11 resource sharing.

Submitting depth buffer

To submit a depth buffer, set the extension pointer of each varjo_LayerMultiProjView to a varjo_ViewExtensionDepth.

It is common practice to set up these structures once, outside the rendering loop.

The following example shows how a (forward) depth buffer can be submitted. In this example, the depth buffer contains values from 0.0 to 1.0, and the near and far planes are set to 0.1 m and 300 m, respectively. These values should match the near and far planes of the projection used to render the depth buffer.

```cpp
std::vector<varjo_ViewExtensionDepth> extDepthViews(viewCount);
for (int i = 0; i < viewCount; i++) {
    const varjo_Viewport& viewport = viewports[i];
    extDepthViews[i].header.type = varjo_ViewExtensionDepthType;
    extDepthViews[i].header.next = nullptr;
    extDepthViews[i].minDepth = 0.0;
    extDepthViews[i].maxDepth = 1.0;
    extDepthViews[i].nearZ = 0.1;
    extDepthViews[i].farZ = 300;
    // Point to this view's rectangle in the depth swap chain
    extDepthViews[i].viewport = varjo_SwapChainViewport{depthSwapchain, viewport.x, viewport.y, viewport.width, viewport.height, 0, 0};
}

std::vector<varjo_LayerMultiProjView> multiprojectionViews(viewCount);
for (int i = 0; i < viewCount; i++) {
    ...
    multiprojectionViews[i].extension = &extDepthViews[i].header;
}
```

Shutdown

Use the following functions to free the allocated data structures and shut down the session:

```cpp
varjo_FreeFrameInfo(frameInfo);
varjo_FreeSwapChain(swapchain);  // Free every swap chain created, including any depth swap chain
varjo_SessionShutDown(session);
```