Achieving performance with high resolution rendering


Table of Contents

- Introduction to Varjo’s high resolution headsets

- Different render modes selectable by the engine: How to select the right one?

- User settings that affect rendering in Varjo Base

- Unity-specific topics

- Unreal-specific topics

- OpenXR-specific topics

- Varjo Native topics

Introduction to Varjo’s high resolution headsets

Varjo’s hardware is capable of displaying much higher resolution than other headsets. Filling the display pixels at optimal quality would require massive rendering resolutions and affect performance. This is why Varjo provides different APIs that help engines maintain a high framerate while still achieving high resolution. This document gives an overview of the different ways of balancing framerate, performance, and resolution on Varjo headsets.

Varjo’s APIs for achieving high resolution are only available to Varjo native apps and to OpenXR applications that implement the Varjo-specific extensions. OpenVR applications, and OpenXR applications that don’t use Varjo’s extensions, use stereo rendering.

Resolutions on Varjo headsets

Varjo XR-4 and Aero have two displays, while Varjo XR-3 and VR-3 have four displays (2 context displays and 2 focus displays). All devices can, however, benefit from foveated rendering. XR functions use some additional GPU resources for the compositor, and the XR system also locks the framerates of the VR content and the XR camera feed together. As a result, VR content may sometimes appear somewhat different when XR functions are turned on, so they should only be turned on when they are actually used.

The actual resolution rendered to the headset depends on the “Resolution Quality” setting selected in Varjo Base and on the render mode that the application is using (see below). The default setting in Varjo Base is “High”, and it is recommended for most cases. The perceived difference between “High” and “Highest” is small, but “Highest” can be useful if your app doesn’t support anti-aliasing. It will, however, affect the framerate. For the exact resolutions that our headsets render, see the tables under the heading “Resolution Quality”.

Different render modes selectable by the engine: How to select the right one?

Engines have a few different modes that can be used to achieve a good balance between performance and resolution. They can be used separately or in combination.

View mode

- Quad view

- Stereo view

Foveation

- Static / Fixed foveation

- Gaze-based foveation

Pixel fill

- VRS

- No VRS, standard rasterisation

Quad view / Foveation / Fixed foveation

Quad view is a technology that reduces the number of pixels an engine needs to render by rendering some parts of the display in higher resolution than others. The engine renders a total of four views: two focal views and two peripheral views. The focal and peripheral views have different resolutions.

This means that, for each eye, one area covers the full field of view in “normal” resolution (the peripheral view) and one smaller area provides a higher-resolution view (the focal view, also called the foveal view). Using Varjo’s high-speed eye tracking, the foveal view follows the user’s gaze so that the user has high resolution wherever they look. This is what we call foveated rendering, and it is the optimal way to achieve high resolution with a minimal number of pixels rendered.

The engine can also choose to keep the foveal view locked to the viewport center. On XR-3 and VR-3 this is the same as the focus display position. This is called “fixed foveation”, and it can be preferable if gaze-based foveation is not possible or if it creates artefacts.

Quad view is independent of the hardware displays in XR-3 and VR-3 and is therefore also useful for the two-display setup of the XR-4 and Aero headsets. For XR-4, quad view is especially important because the high total number of display pixels makes stereo view mode very resource intensive to run.
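To make the pixel savings concrete, the sketch below compares how many pixels a uniform-density stereo render and a quad view render would need. All numbers (fields of view, pixels-per-degree values) are made up for illustration and are not Varjo's actual resolutions, and the linear degrees-to-pixels conversion is a simplification of a real projection.

```python
def view_pixels(fov_h_deg, fov_v_deg, ppd):
    """Pixels needed to cover a field of view at a given pixels-per-degree density."""
    return round(fov_h_deg * ppd) * round(fov_v_deg * ppd)

# Hypothetical numbers, chosen only for illustration.
FULL_FOV = (100, 90)   # degrees covered by the peripheral view
FOCAL_FOV = (30, 30)   # degrees covered by the focal view
HIGH_PPD = 40          # density wanted where the user is looking
LOW_PPD = 15           # density acceptable in the periphery

def stereo_pixels():
    # Stereo: one view per eye, so the whole field of view needs the high density.
    return 2 * view_pixels(*FULL_FOV, HIGH_PPD)

def quad_pixels():
    # Quad view: a low-density peripheral view plus a small high-density focal view per eye.
    per_eye = view_pixels(*FULL_FOV, LOW_PPD) + view_pixels(*FOCAL_FOV, HIGH_PPD)
    return 2 * per_eye

if __name__ == "__main__":
    s, q = stereo_pixels(), quad_pixels()
    print(f"stereo: {s:,} px, quad view: {q:,} px")
```

With these illustrative numbers, quad view needs roughly a quarter of the pixels that stereo needs to reach the same density at the gaze point.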

Quad view and Performance

If the engine you are using doesn’t support multi-view rendering (up to 4 views in one draw call), quad view may reduce your framerate, because the scene has to be drawn separately for each view. The ideal way to solve this is to develop support for multi-view rendering.

Quad view and blend artefacts

If the rendered image in the foveal view is not exactly the same as the corresponding area in the peripheral view, the blending may cause artefacts near the edges of the foveal view. These can be more or less visible as a square. If foveation is turned on, the square moves with your eyes. If it is turned off, the square stays at the same position as the focus display. This can happen in the following scenarios:

- Your engine is using different levels of detail (LODs) for different viewports (see the platform-specific details below).

- Your scene is using certain effects, such as screen-space or temporal effects, which produce different results depending on the pixel density (see the details per engine below).

- Your engine is using certain anti-aliasing techniques (such as TAA). This is, however, a very minor artefact.

Quad view and culling

If your engine uses culling, the quad view may cause artefacts on the edges of the display.

Stereo view

Stereo view is the most common way of rendering a VR application. Running stereo view on a Varjo headset means that the application submits only the two peripheral views and skips the foveal views, so the same resolution has to be used across the whole display. It also means that the full resolution of the Varjo headset displays will not be used, because the recommended resolutions are lower than what the displays can show. Generally, you will get better performance and image quality with quad view than with stereo view. Still, the resolution in stereo view might be good enough if quad view is not possible due to, for example, engine limitations or blend artefacts. It is, for example, possible to achieve a good framerate with stereo view at Aero native resolution. For XR-4 specifically, stereo view is not recommended, as it will have either noticeably lower resolution than quad view or an extremely high performance overhead because of all the pixels that need to be rendered in the viewport.

Variable rate shading

Variable Rate Shading (VRS) is a rendering technique introduced in the RTX series of GPUs that allows the shading rate to be reduced with little or no degradation in perceived image quality. The hardware feature, which also requires multisampling to be enabled, works in the opposite direction to multisampling: a single shading result is broadcast to a group of nearby pixels (for instance 1x2, 2x2, and up to 4x4), thereby reducing the shading rate and potentially increasing rendering performance.

VRS comes with two levels of support: tier 1 and tier 2. Here we describe only tier 2, which is a superset of tier 1 functionality and offers opportunities for significant performance gains.

The main idea is that the application constructs a VRS map that marks the regions of the display where shading should be fine or coarse. The map can be based on the resolution of the displays and optics alone, on the resolution of the displays and optics together with the content of the scene, or it can go a step further and shade even more coarsely in the regions where the user’s gaze is not currently focused (this is called eye-tracked foveated rendering). When these VRS maps are used with the swapchain, the GPU takes care of reducing the shader load wherever a coarser rate is specified.
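As a rough illustration of what a gaze-based VRS map looks like, the sketch below builds a grid of per-tile shading rates that get coarser with distance from a gaze point. The tile size, the two radii, and the three rate levels are assumptions picked for the example; a real application would query the tile size from the graphics API and upload the result as a shading rate image in that API's own format.

```python
import math

def build_vrs_map(width_px, height_px, gaze_uv, tile=16,
                  inner_radius=0.15, outer_radius=0.35):
    """Return a 2D list of shading rates, one entry per tile.

    gaze_uv is the gaze point in normalised [0, 1] image coordinates.
    Rates are (x, y) coarse pixel sizes: (1, 1) is full rate, (4, 4) the coarsest.
    Radii are expressed as fractions of the image height (assumed values).
    """
    cols = math.ceil(width_px / tile)
    rows = math.ceil(height_px / tile)
    aspect = width_px / height_px
    vrs = []
    for r in range(rows):
        row = []
        for c in range(cols):
            # Tile centre in normalised coordinates.
            u = (c + 0.5) * tile / width_px
            v = (r + 0.5) * tile / height_px
            # Scale x by the aspect ratio so the regions are circular on screen.
            dist = math.hypot((u - gaze_uv[0]) * aspect, v - gaze_uv[1])
            if dist < inner_radius:
                row.append((1, 1))   # full shading rate around the gaze point
            elif dist < outer_radius:
                row.append((2, 2))   # one shading result per 2x2 pixels
            else:
                row.append((4, 4))   # one shading result per 4x4 pixels
        vrs.append(row)
    return vrs

if __name__ == "__main__":
    vrs_map = build_vrs_map(640, 480, gaze_uv=(0.5, 0.5))
    print(len(vrs_map), "rows x", len(vrs_map[0]), "columns")
```

Passing a constant `gaze_uv` of `(0.5, 0.5)` gives a fixed-foveation map; updating it every frame from eye tracking gives the eye-tracked variant.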

User settings that affect rendering in Varjo Base

Simple render

Simple render is a mode specific to the XR-3 and VR-3 headsets. Other Varjo headsets do not need it, as they only have two displays, one per eye.

XR-3 and VR-3 users can choose to run their devices in “simple render” mode. This disables the focus display and makes the headset behave like a headset with two displays, so the rendering parameters are the same as for the Aero headset. This frees up some resources for the Varjo compositor.

Simple render affects rendering resolutions, since the engine no longer needs to render for the focus display. It does NOT, however, turn off quad view: simple render does not automatically result in stereo rendering.

The simple render mode can currently only be controlled by the end user through the setting in Varjo Base; the engine cannot control it.

The simple render mode can be useful for avoiding some artefacts connected to the blending of the context display and focus display.

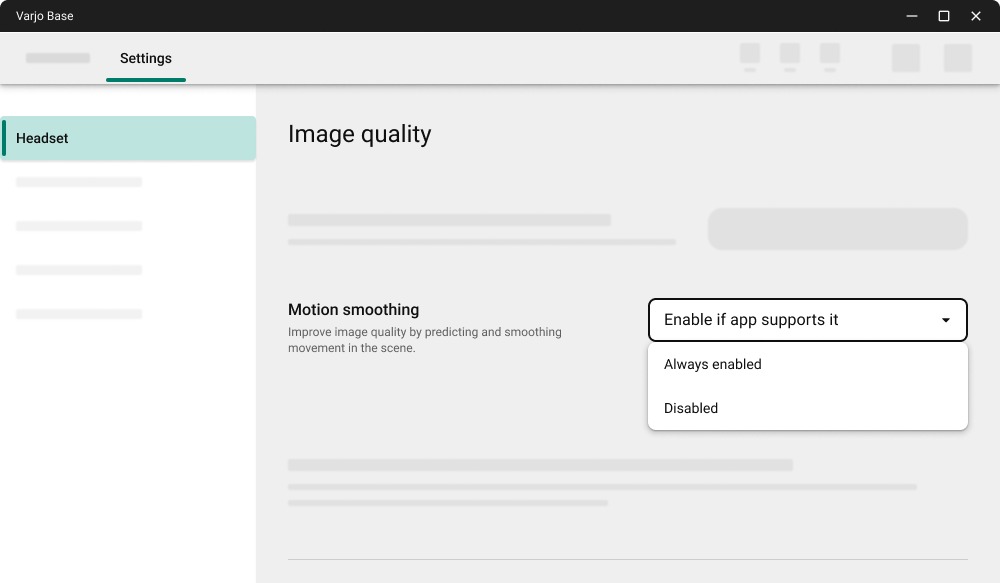

Motion smoothing

Motion smoothing predicts upcoming motion and is useful if the application is not able to reach 90 fps, as it inserts additional frames in real time, imitating a high-framerate experience. Having this function turned on has no impact on quality or performance if the application is able to run at 90 fps. If the application cannot reach 90 fps, it is locked down to 45 fps, so that every second frame is real and every second frame is synthesised. If the application runs below 45 fps, it is locked down further, and two out of three frames are synthesised. If the application runs below 30 fps, motion smoothing tries to predict three frames for every real frame, resulting in a suboptimal experience. Applications should aim to render at least 30 fps when this option is used.

To predict the frames, motion smoothing uses velocity vectors that are either provided by the application or generated automatically by the Varjo compositor based on the content. We recommend that application developers provide motion vectors to the Varjo compositor. If motion vectors are not provided by the application, they are generated from the RGB images of the previous frames. This takes computation time and reduces base performance when motion smoothing is enabled.
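The thresholds described above can be summarised in a small sketch. The function name is hypothetical, and the 22.5 fps value for the lowest tier is an inference from "three predicted frames per real frame" on a 90 Hz display rather than a documented figure.

```python
REFRESH_HZ = 90  # display refresh rate used throughout this section

def smoothing_cadence(app_fps):
    """Return (locked_fps, synthesised_frames_per_real_frame) for a measured framerate."""
    if app_fps >= 90:
        divisor = 1   # every displayed frame is real
    elif app_fps >= 45:
        divisor = 2   # every second frame is synthesised
    elif app_fps >= 30:
        divisor = 3   # two out of three frames are synthesised
    else:
        divisor = 4   # three synthesised frames per real frame (assumed 22.5 fps lock)
    return REFRESH_HZ / divisor, divisor - 1

if __name__ == "__main__":
    for fps in (90, 60, 40, 20):
        print(fps, smoothing_cadence(fps))
```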

Motion smoothing requires Vertical synchronisation to be on.

The Motion smoothing setting in Varjo Base has three options:

- Enabled if app supports it: If the application provides motion vectors, motion smoothing is used. Otherwise it is off.

- Always enabled: Motion smoothing is always used. If the application provides motion vectors, they are used. Otherwise they are generated by the Varjo compositor.

- Disabled: Motion smoothing is not used.

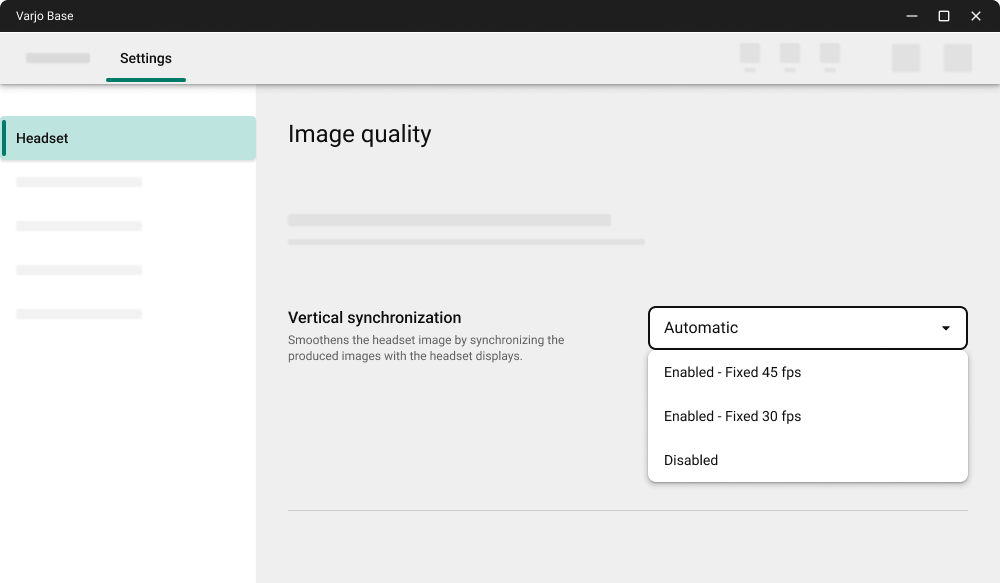
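The three Varjo Base options combine with the application's behaviour as in the sketch below. The enum and function names are hypothetical and only model the decision described in this section; they are not part of any Varjo API.

```python
from enum import Enum

class SmoothingSetting(Enum):
    ENABLED_IF_SUPPORTED = "Enabled if app supports it"
    ALWAYS_ENABLED = "Always enabled"
    DISABLED = "Disabled"

def motion_vector_source(setting, app_provides_vectors):
    """Return 'application', 'compositor', or None when motion smoothing is off."""
    if setting is SmoothingSetting.DISABLED:
        return None
    if app_provides_vectors:
        # Application-provided vectors are used whenever smoothing is allowed.
        return "application"
    if setting is SmoothingSetting.ALWAYS_ENABLED:
        # The compositor generates vectors from previous RGB frames (costs GPU time).
        return "compositor"
    return None  # "Enabled if app supports it" without vectors: smoothing stays off

if __name__ == "__main__":
    for setting in SmoothingSetting:
        for provides in (True, False):
            print(setting.value, provides, motion_vector_source(setting, provides))
```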

Vertical synchronisation / Framerate lock

Vertical synchronisation, also known as VSync, makes sure that the frames rendered on the headset are complete before they are displayed. This eliminates any vertical tearing artefacts from the displayed image.

VSync requires locking the framerate to an integer fraction of the display’s refresh rate. The framerate always locks to the lower bound of the range that motion prediction works in: when the option is used, 45-89 fps locks to 45 fps, and 30-44 fps locks to 30 fps.

Even when VSync is disabled, the framerate is not allowed to exceed 90 fps, to ensure tear-free frame delivery at high framerates.

Applications can also add their own fps locks, but this is not part of Varjo’s API. We recommend that application developers rely on the fps lock in Varjo Base instead of making their own custom implementation.

Unity-specific topics

Choosing the rendering mode

By default, Unity renders in instanced quad view, but a developer may also choose to render in stereo. For this, the Varjo XR SDK plugin provides a setting called Stereo Rendering Mode.

Post processes

The post-processing effects that work with Varjo’s quad view are present in our Unity examples. You can copy the whole post-process volume to your own scenes.

Choosing the resolution

Unity renders using the resolution and framerate set in Varjo Base. If you wish to modify the resolution directly in Unity you can apply a scaling factor to the resolution.
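When picking a scaling factor, keep in mind that the pixel count changes with the square of the factor if it is applied to both width and height. The sketch below shows the arithmetic; the per-axis behaviour is an assumption about how the factor is applied, and the resolution used is an arbitrary example.

```python
def scaled_resolution(width, height, scale):
    """Apply a per-axis scale factor and return (width, height, pixel_ratio)."""
    new_w = max(1, round(width * scale))
    new_h = max(1, round(height * scale))
    return new_w, new_h, (new_w * new_h) / (width * height)

if __name__ == "__main__":
    # A factor of 0.8 per axis leaves about 64% of the pixels to render.
    print(scaled_resolution(2000, 2000, 0.8))
```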

Unreal-specific topics

Choosing the rendering mode

To build your Unreal app for a Varjo headset, you need to use the Varjo OpenXR plugin for Unreal. You can select between quad view and stereo view in the plugin.

Blend artefacts in quad view

If you start developing an Unreal project from scratch and enable OpenXR mode as outlined in the developer documentation, you may see blend artefacts that cause a more or less visible square (as outlined above):

- If the scene is using different levels of detail (LODs), this may result in blend artefacts associated with shadows, or with objects appearing when you get closer.

- If the scene is using Screen Space Reflections, you may experience blend artefacts in reflections.

- Some transparent objects, especially atmosphere, clouds, or glass materials, may cause blend artefacts.

- If auto exposure for Unreal’s cameras is set to ON, you may see a blend artefact (usually a white square). This can be fixed by setting the auto exposure to manual and adjusting it accordingly.

Other considerations

- Lumen doesn’t work with quad view and falls back to ISR.

- In some cases Unreal’s debug text may appear on the focus display (this would be seen in the middle of the display). This can be disabled with the CVar “DISABLEALLSCREENMESSAGES”.

OpenXR-specific topics

Choosing the render mode

Through OpenXR the developer can always choose to render to:

- two viewports

- four viewports (a foveal view and a peripheral view for each eye)

Rendering to four viewports uses the quad view extension from Varjo, which allows for fixed foveation and dynamic foveation.

The table below gives options for developers to improve the performance depending on whether they use stereo rendering or quad view.

| | Stereo Render | Quad View |
|---|---|---|
| Extensions required | None | Varjo quad view extension (XR_VARJO_quad_views), Varjo foveated rendering extension (XR_VARJO_foveated_rendering) |
| Automatic fixed foveation | Not available | Yes, requires XR_VARJO_quad_views to be enabled by the application. |
| Automatic dynamic floating window foveation | Not available | Yes, requires XR_VARJO_quad_views to be enabled by the application. |
| Variable shading rate foveation | Yes, this can be implemented by the application developer, but only for fixed foveation. Eye tracked foveation is not supported, but might be available in the future. | Yes, this can be implemented by the application developer orthogonally with any of the two modes above and requires XR_VARJO_foveated_rendering to be enabled by the application. |
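One way to read the table is as a decision on which extensions to request at instance creation. The sketch below models that decision on a plain list of extension names, which in a real application would come from `xrEnumerateInstanceExtensionProperties`. The function name and the returned mode labels are hypothetical, and treating XR_VARJO_foveated_rendering as the switch for gaze-based foveation is an assumption made for this example.

```python
QUAD_VIEWS = "XR_VARJO_quad_views"
FOVEATED = "XR_VARJO_foveated_rendering"

def plan_render_mode(available_extensions, want_quad=True):
    """Return (mode, extensions_to_enable) for a list of runtime extension names."""
    available = set(available_extensions)
    if want_quad and QUAD_VIEWS in available:
        if FOVEATED in available:
            # Both Varjo extensions present: quad view with eye-tracked foveation.
            return "quad view, gaze-based foveation", [QUAD_VIEWS, FOVEATED]
        return "quad view, fixed foveation", [QUAD_VIEWS]
    # No Varjo extensions requested: the runtime falls back to two views.
    return "stereo", []

if __name__ == "__main__":
    print(plan_render_mode([QUAD_VIEWS, FOVEATED]))
    print(plan_render_mode(["XR_KHR_D3D11_enable"]))
```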

OpenXR: Variable rate shading

In OpenXR the application can choose freely among the supported graphics APIs and the render modes described earlier. The graphics APIs that Varjo currently supports are D3D11, D3D12, Vulkan and OpenGL. All of them support VRS, but some require graphics extensions (in the case of Vulkan and OpenGL) or Nvidia APIs (in the case of D3D11).

If you choose to implement VRS that only takes into account the content of the scene together with the resolution of the output and optics, you just need to pick a graphics API with the right extension.

On the other hand, if you also want to optimize for where the user’s gaze falls on the display, your application needs access to the rendering gaze provided by Varjo’s eye tracker. This is done by enabling both the XR_VARJO_quad_views and XR_VARJO_foveated_rendering extensions and reading the rendering gaze from the API.

This table shows the requirements for dynamic eye tracked foveated rendering with VRS.

| Graphics API | Support | OpenXR extensions |
|---|---|---|
| D3D11 | With NVAPI | XR_VARJO_quad_views + XR_VARJO_foveated_rendering |
| D3D12 | Up to 4x4 shading rate with standard DirectX with multiple viewport support. | XR_VARJO_quad_views + XR_VARJO_foveated_rendering |
| Vulkan | Yes, with the VK_KHR_fragment_shading_rate extension, supported today by Nvidia. Can be improved with VK_KHR_fragment_shading_rate2, not yet supported by Nvidia. Other extensions exist for foveation on mobile platforms (Android / Qualcomm). | XR_VARJO_quad_views + XR_VARJO_foveated_rendering. OpenXR extensions with dependency on Vulkan exist, but there’s no Windows HMD today that supports it. |
| OpenGL | Yes, with GL_NV_shading_rate_image extension. | XR_VARJO_quad_views + XR_VARJO_foveated_rendering |

OpenXR developer work

- Compute projection of foveated gaze to viewport.

- Construct VRS maps.

- Adjust rendering to mitigate visual artefacts.
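The first step, projecting the gaze onto a viewport, can be sketched as follows. It assumes the gaze is available as a direction vector in the view's own space (looking down -Z, as in OpenXR) and that the view's field of view is given as four half-angles in the style of OpenXR's `XrFovf`. How the gaze vector is obtained from the API is outside this sketch, and the function name is hypothetical.

```python
import math

def gaze_to_ndc(gaze_dir, angle_left, angle_right, angle_up, angle_down):
    """Project a view-space gaze direction to normalised device coordinates.

    gaze_dir is (x, y, z) in view space, looking down -Z.
    Angles are in radians, with left and down negative, as in XrFovf.
    Returns (x, y) in [-1, 1] when the gaze falls inside the view, else None.
    """
    x, y, z = gaze_dir
    if z >= 0:
        return None  # gaze points away from the view plane
    tan_l, tan_r = math.tan(angle_left), math.tan(angle_right)
    tan_d, tan_u = math.tan(angle_down), math.tan(angle_up)
    # Intersect the gaze ray with the plane z = -1.
    px, py = x / -z, y / -z
    # Map the (possibly asymmetric) frustum extents to [-1, 1].
    ndc_x = (2 * px - (tan_r + tan_l)) / (tan_r - tan_l)
    ndc_y = (2 * py - (tan_u + tan_d)) / (tan_u - tan_d)
    if abs(ndc_x) > 1 or abs(ndc_y) > 1:
        return None  # gaze falls outside this viewport
    return ndc_x, ndc_y

if __name__ == "__main__":
    quarter = math.pi / 4
    print(gaze_to_ndc((0.0, 0.0, -1.0), -quarter, quarter, quarter, -quarter))
```

The resulting coordinates can be remapped to [0, 1] and used as the centre of a VRS map for that viewport.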

References to implementation tips for VRS rendering can be found in the blog post “Dynamic foveated variable shader rate with openxr toolkit and aero”.

Varjo Native topics

Variable rate shading

Graphics requirements are the same as in the above OpenXR table. For implementation details, see the documentation.