Depth occlusion

Note: you are currently viewing documentation for a beta or an older version of Varjo

Depth occlusion composites the real and virtual worlds and sorts them by depth, using the depth-sensing capabilities of XR-3 and XR-1 Developer Edition. This lets you bring your real hands into the virtual world or, with XR-3, even other people’s hands.

Follow the steps below to enable depth occlusion for your project.

Using depth occlusion

Enable the mixed reality cameras as described in Mixed Reality with Unreal. Depth occlusion doesn’t require custom post processing. It’s enough to set Alpha Blend as the Preferred Environment Blend Mode.

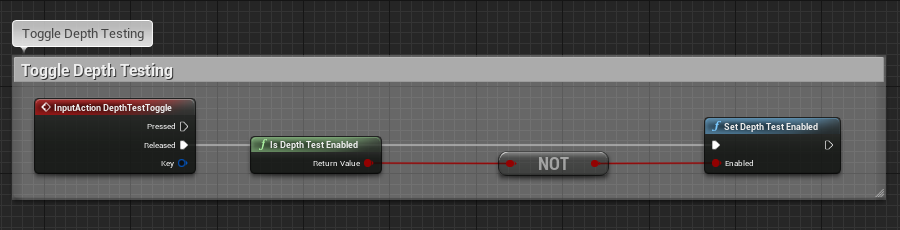

Before you can use depth occlusion, you have to enable depth testing. You can do this with the Set Depth Test Enabled blueprint node.

Is Depth Test Enabled returns the current state of depth testing.

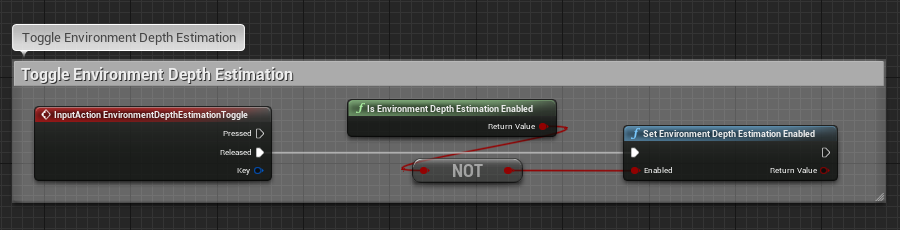

To enable depth occlusion with the real world, enable environment depth estimation with the Set Environment Depth Estimation Enabled node.

Is Environment Depth Estimation Enabled returns the current state.

To enable or disable depth estimation, call the respective methods in the VarjoMixedReality class.

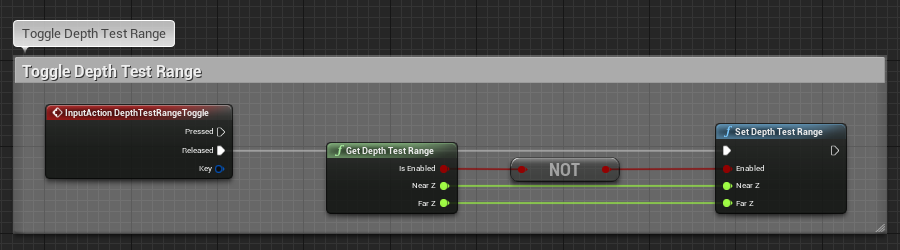
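The relationship between the two switches described above can be summarized in a small conceptual model. This is only a sketch of the logic, assuming that real-world occlusion takes effect when both depth testing and environment depth estimation are enabled. The names `OcclusionSettings` and `IsRealWorldOcclusionActive` are hypothetical and are not part of the Varjo plugin; in a project you would use the blueprint nodes named above.

```cpp
// Conceptual model only. These names are hypothetical and are NOT the Varjo plugin API.
struct OcclusionSettings {
    // Mirrors the state toggled by the Set Depth Test Enabled node.
    bool depthTestEnabled = false;
    // Mirrors the state toggled by the Set Environment Depth Estimation Enabled node.
    bool environmentDepthEstimationEnabled = false;
};

// Assumption: occlusion by the real world needs both switches turned on.
inline bool IsRealWorldOcclusionActive(const OcclusionSettings& settings) {
    return settings.depthTestEnabled && settings.environmentDepthEstimationEnabled;
}
```

If real hands do not occlude virtual objects, checking both Is Depth Test Enabled and Is Environment Depth Estimation Enabled is a reasonable first step.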

The default depth test range is 0.0 to 0.75 m, which is typically good for hand occlusion. For finer control over the range included in depth testing, you can use the Set Depth Test Range blueprint node.

The current state of the depth test range can be checked with Get Depth Test Range.
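To illustrate what the depth test range means for a single pixel, here is a minimal conceptual sketch. It is not the plugin's implementation. It assumes that the depth comparison is applied only when the estimated real-world depth falls inside the range, and that virtual content is shown otherwise. `DepthTestRange` and `RealWorldOccludes` are hypothetical names introduced for this example, and depths are in meters.

```cpp
// Hypothetical types for illustration only; not part of the Varjo plugin.
struct DepthTestRange {
    float nearM = 0.0f;   // default lower bound stated in the text
    float farM  = 0.75f;  // default upper bound stated in the text
};

// Returns true when the real-world (camera) pixel should appear in front of
// the virtual pixel. Assumption: the depth comparison only happens when the
// real-world depth lies inside the configured range.
inline bool RealWorldOccludes(float realDepthM, float virtualDepthM,
                              const DepthTestRange& range) {
    const bool inRange = realDepthM >= range.nearM && realDepthM <= range.farM;
    return inRange && realDepthM < virtualDepthM;
}
```

Under this model, a hand at 0.4 m occludes a virtual object at 1.0 m with the default range, while a person standing 1.5 m away does not unless the upper bound of the range is raised past that distance.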