OpenXR

Note: you are currently viewing documentation for a beta or an older version of Varjo

OpenXR is an open, royalty-free standard used by virtual reality and augmented reality platforms and devices. It is developed by a working group managed by the Khronos Group consortium, and Varjo is an active contributor to OpenXR standardization. Varjo headsets fully support OpenXR and can run your OpenXR applications.

Developing OpenXR applications with Varjo

Supported extensions

- XR_KHR_D3D11_enable, extension version 5

- XR_KHR_opengl_enable, extension version 9

- XR_KHR_win32_convert_performance_counter_time, extension version 1

- XR_KHR_composition_layer_depth, extension version 5

- XR_EXT_eye_gaze_interaction, extension version 1

- XR_VARJO_composition_layer_depth_test, extension version 1

- XR_VARJO_environment_depth_estimation, extension version 1

- XR_VARJO_quad_views, extension version 1

- XR_VARJO_foveated_rendering, extension version 1

- XR_EXT_debug_utils, extension version 3
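To check these requirements at startup, an application can compare the extensions it depends on against what the runtime reports. The following is a minimal sketch, assuming the name and version pairs have already been copied out of the XrExtensionProperties array returned by xrEnumerateInstanceExtensionProperties. The helper missingExtensions and the ExtensionVersions alias are hypothetical and not part of any Varjo or Khronos API.

```cpp
#include <cstdint>
#include <map>
#include <string>
#include <vector>

// Extension name -> spec version, e.g. copied from the XrExtensionProperties
// array (extensionName, extensionVersion) returned by
// xrEnumerateInstanceExtensionProperties. Hypothetical alias.
using ExtensionVersions = std::map<std::string, uint32_t>;

// Hypothetical helper: returns every required extension that is either absent
// or reported with a lower version than the application was written against.
std::vector<std::string> missingExtensions(const ExtensionVersions& available,
                                           const ExtensionVersions& required) {
    std::vector<std::string> missing;
    for (const auto& [name, minVersion] : required) {
        auto it = available.find(name);
        if (it == available.end() || it->second < minVersion) {
            missing.push_back(name);
        }
    }
    return missing;
}
```

An empty result means every required extension is present at the expected version or newer.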

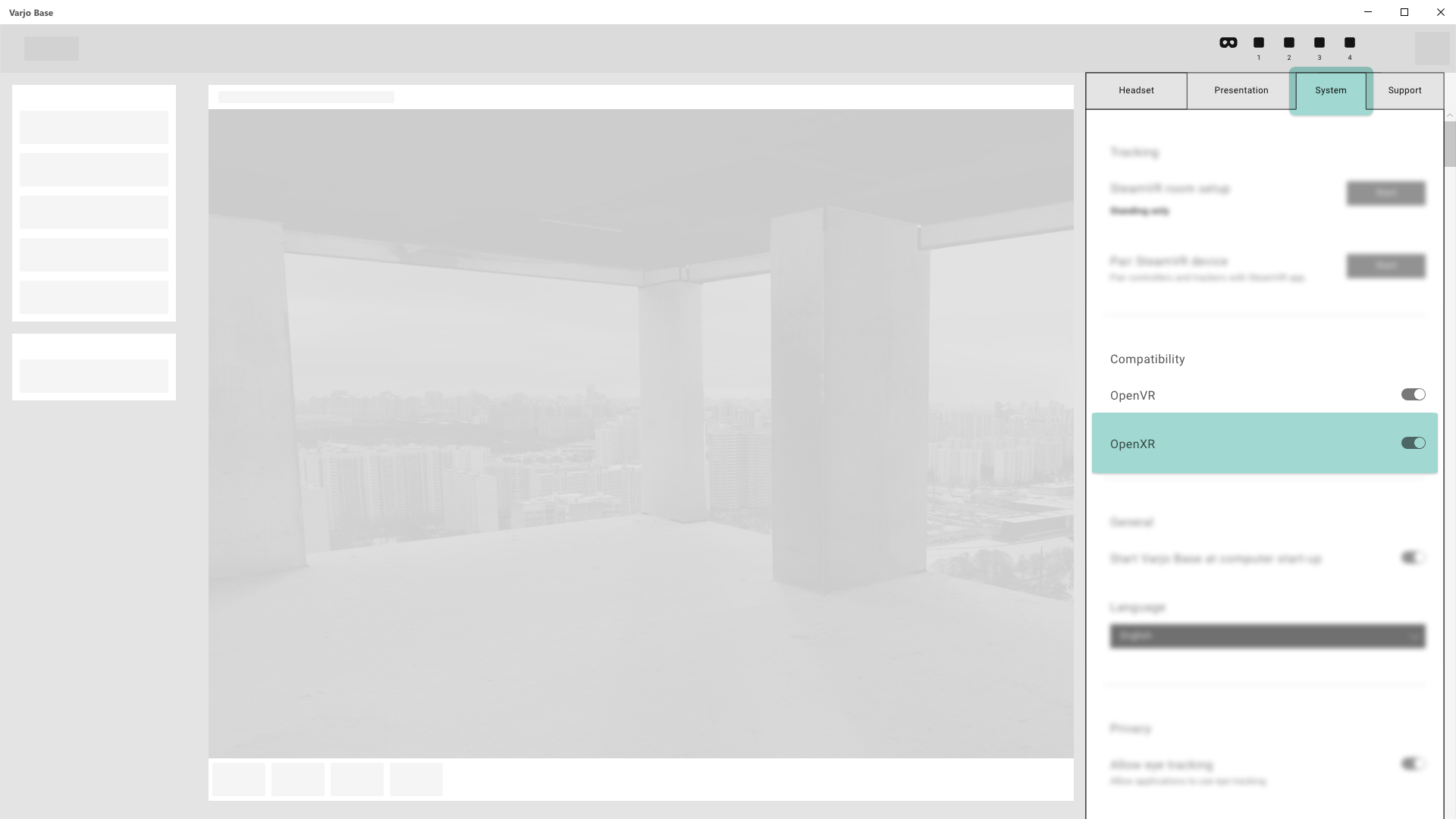

Enable OpenXR Compatibility

You can develop applications for VR-3, VR-2 Pro, VR-2, and VR-1 using the OpenXR API as you would for any other VR device. To use OpenXR on a Varjo headset, make sure that OpenXR is enabled in Varjo Base under System > Compatibility (it is enabled by default). You can use either Vive or Index controllers with any Varjo device through OpenXR.

Download OpenXR sample application

You can download a sample OpenXR application that shows how to use Varjo extensions.

Human eye resolution

To take advantage of Varjo’s human eye resolution displays, use the XR_VARJO_quad_views extension described in the official OpenXR specification. It gives you two views per eye, a context view and a focus view, like the ones shown in the Analytics window in Varjo Base.

Like all OpenXR extensions, XR_VARJO_quad_views must be enabled when the instance is created. If it is not enabled, the runtime does not return XR_VIEW_CONFIGURATION_TYPE_PRIMARY_QUAD_VARJO as a view configuration and returns an error if the application passes that value to any function.
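Because quad views have to be requested at instance creation, one option is to build the enabled extension list from what the runtime advertises. This sketch assumes a D3D11 application and uses a hypothetical helper, buildEnabledExtensions, whose output would be passed as XrInstanceCreateInfo::enabledExtensionNames; it is not taken from the Varjo sample.

```cpp
#include <algorithm>
#include <string>
#include <vector>

// Hypothetical helper: builds the list that would be passed as
// XrInstanceCreateInfo::enabledExtensionNames. Quad views are requested only
// when the runtime advertises XR_VARJO_quad_views, so the application can
// still fall back to the stereo view configuration on other runtimes.
std::vector<const char*> buildEnabledExtensions(const std::vector<std::string>& available,
                                                bool& quadViewsEnabled) {
    // Assumption: the application renders with D3D11.
    std::vector<const char*> enabled = {"XR_KHR_D3D11_enable"};
    const bool hasQuad = std::find(available.begin(), available.end(),
                                   "XR_VARJO_quad_views") != available.end();
    if (hasQuad) {
        enabled.push_back("XR_VARJO_quad_views");
    }
    quadViewsEnabled = hasQuad;
    return enabled;
}
```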

When an application enumerates view configurations, the Varjo OpenXR runtime returns XR_VIEW_CONFIGURATION_TYPE_PRIMARY_QUAD_VARJO first:

```cpp
// xrEnumerateViewConfigurations: the first call queries the count,
// the second call fills in the array.
uint32_t viewConfigTypeCount = 0;
XRCHECK(xrEnumerateViewConfigurations(instance, systemId, 0, &viewConfigTypeCount, nullptr));
std::vector<XrViewConfigurationType> viewConfigTypes(viewConfigTypeCount);
XRCHECK(xrEnumerateViewConfigurations(instance, systemId, viewConfigTypeCount, &viewConfigTypeCount, viewConfigTypes.data()));
XrViewConfigurationType viewConfigType = viewConfigTypes[0]; // will contain XR_VIEW_CONFIGURATION_TYPE_PRIMARY_QUAD_VARJO
```
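Taking viewConfigTypes[0] relies on the order in which the runtime lists configurations. A slightly more defensive variant searches for quad views and falls back to stereo. The sketch below assumes the enumerated values have been mapped to a local stand-in enum so it compiles without openxr.h; ViewConfig and chooseViewConfig are hypothetical, and real code would compare XrViewConfigurationType values directly.

```cpp
#include <algorithm>
#include <optional>
#include <vector>

// Hypothetical stand-in for XrViewConfigurationType so the sketch compiles
// without openxr.h; real code uses the XR_VIEW_CONFIGURATION_TYPE_* values.
enum class ViewConfig { PrimaryMono, PrimaryStereo, PrimaryQuadVarjo };

// Picks quad views when offered, otherwise stereo, without relying on the
// order in which the runtime lists the configurations.
std::optional<ViewConfig> chooseViewConfig(const std::vector<ViewConfig>& offered) {
    for (ViewConfig preferred : {ViewConfig::PrimaryQuadVarjo, ViewConfig::PrimaryStereo}) {
        if (std::find(offered.begin(), offered.end(), preferred) != offered.end()) {
            return preferred;
        }
    }
    return std::nullopt;  // no configuration this application can render
}
```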

If the application does not hard-code the view count as 2, it should work with quad views without any further modifications.

For example, to find out how many views there are in a given view configuration, do the following:

```cpp
// The first call queries the number of views, the second call fills in their descriptions.
uint32_t viewCount = 0;
XRCHECK(xrEnumerateViewConfigurationViews(instance, systemId, viewConfigType, 0, &viewCount, nullptr));
std::vector<XrViewConfigurationView> configViews(viewCount, {XR_TYPE_VIEW_CONFIGURATION_VIEW});
XRCHECK(xrEnumerateViewConfigurationViews(instance, systemId, viewConfigType, viewCount, &viewCount, configViews.data()));
```

After these calls, viewCount is 4 and configViews describes all four views. The first two are the context views, which have a lower pixel density, and the third and fourth are the focus views, which have a higher pixel density.
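A loop over configViews therefore covers both stereo and quad views. The sketch below labels each view and totals the recommended pixel count per frame. ViewSize is a hypothetical stand-in for the two XrViewConfigurationView fields it uses, and describeView assumes the XR_VARJO_quad_views ordering of left and right context views followed by left and right focus views.

```cpp
#include <cstddef>
#include <cstdint>
#include <string>
#include <vector>

// Hypothetical stand-in for the XrViewConfigurationView fields used here.
struct ViewSize {
    uint32_t recommendedImageRectWidth;
    uint32_t recommendedImageRectHeight;
};

// Quad views: 0 = left context, 1 = right context, 2 = left focus, 3 = right focus.
// With stereo only indices 0 and 1 exist, so the same loop handles both.
std::string describeView(std::size_t index) {
    const char* eye = (index % 2 == 0) ? "left" : "right";
    const char* kind = (index < 2) ? "context" : "focus";
    return std::string(eye) + " " + kind;
}

// Total pixels rendered per frame if every view uses its recommended size.
uint64_t pixelsPerFrame(const std::vector<ViewSize>& views) {
    uint64_t total = 0;
    for (const ViewSize& v : views) {
        total += uint64_t(v.recommendedImageRectWidth) * v.recommendedImageRectHeight;
    }
    return total;
}
```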

Mixed reality support

Enable mixed reality

For XR-3 and XR-1 Developer Edition, you can enable mixed reality by setting the environment blend mode to XR_ENVIRONMENT_BLEND_MODE_ALPHA_BLEND when ending each frame. Detailed information is available in the OpenXR specification.

```cpp
XrFrameEndInfo frameEndInfo{XR_TYPE_FRAME_END_INFO};
frameEndInfo.displayTime = frameState.predictedDisplayTime;
// Alpha blending composites the rendered layers over the video pass-through.
frameEndInfo.environmentBlendMode = mrEnabled ? XR_ENVIRONMENT_BLEND_MODE_ALPHA_BLEND : XR_ENVIRONMENT_BLEND_MODE_OPAQUE;
frameEndInfo.layerCount = 1;
frameEndInfo.layers = layers;
XRCHECK(xrEndFrame(session, &frameEndInfo));
```
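Not every headset supports alpha blending, so it can be worth checking the modes the runtime reports with xrEnumerateEnvironmentBlendModes before setting environmentBlendMode. A minimal sketch, assuming a hypothetical BlendMode stand-in for XrEnvironmentBlendMode and that opaque rendering is always an acceptable fallback:

```cpp
#include <algorithm>
#include <vector>

// Hypothetical stand-in for XrEnvironmentBlendMode; real code would fill
// `supported` from xrEnumerateEnvironmentBlendModes for the chosen view configuration.
enum class BlendMode { Opaque, Additive, AlphaBlend };

// Use alpha blending only when mixed reality is requested and the runtime
// reports it; otherwise fall back to opaque VR rendering (assumed available).
BlendMode chooseBlendMode(const std::vector<BlendMode>& supported, bool mrRequested) {
    const bool alphaSupported =
        std::find(supported.begin(), supported.end(), BlendMode::AlphaBlend) != supported.end();
    return (mrRequested && alphaSupported) ? BlendMode::AlphaBlend : BlendMode::Opaque;
}
```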

Toggle depth estimation

Once the mixed reality cameras are enabled, the Varjo runtime can estimate depth for the incoming video frames. A typical use case is hand occlusion. To use depth estimation, enable the XR_VARJO_environment_depth_estimation extension and call the following function:

```cpp
// Extension function: obtain its pointer with xrGetInstanceProcAddr.
XRCHECK(xrSetEnvironmentDepthEstimationVARJO(session, XR_TRUE));
```

Depth estimation also requires the application to submit a depth buffer, for example through the XR_KHR_composition_layer_depth extension. More information about XR_VARJO_environment_depth_estimation is available in the OpenXR specification.
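With XR_KHR_composition_layer_depth, depth is submitted by chaining an XrCompositionLayerDepthInfoKHR to each XrCompositionLayerProjectionView. The sketch below shows only that chaining pattern. DepthInfo and ProjectionView are simplified, hypothetical stand-ins that omit the type field, the pose, the field of view, and the subImage that points at the depth swapchain; attachDepth is likewise hypothetical.

```cpp
#include <cstddef>
#include <vector>

// Simplified, hypothetical stand-in for XrCompositionLayerDepthInfoKHR.
struct DepthInfo {
    const void* next = nullptr;
    float minDepth = 0.0f, maxDepth = 1.0f;  // range of values stored in the depth buffer
    float nearZ = 0.0f, farZ = 0.0f;         // near/far planes of the projection, in meters
};

// Simplified, hypothetical stand-in for XrCompositionLayerProjectionView.
struct ProjectionView {
    const void* next = nullptr;
};

// Chains one depth info per projection view. `depthInfos` must stay alive
// until the frame is submitted because the views only store pointers to it.
void attachDepth(std::vector<ProjectionView>& views, std::vector<DepthInfo>& depthInfos,
                 float nearZ, float farZ) {
    depthInfos.assign(views.size(), DepthInfo{});
    for (std::size_t i = 0; i < views.size(); ++i) {
        depthInfos[i].nearZ = nearZ;
        depthInfos[i].farZ = farZ;
        depthInfos[i].next = views[i].next;  // preserve anything already chained
        views[i].next = &depthInfos[i];
    }
}
```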

Limit depth testing range

In most cases, mixing real-world and VR content should happen only within a limited range. For example, an application may want to show the user's hands on top of VR content but not the surrounding walls. In this case, XR_VARJO_composition_layer_depth_test can be used to enable depth testing only within a certain range.

The following example is taken from the specification and enables depth testing at a distance of 0 to 1 meter:

```cpp
XrCompositionLayerProjection layer{XR_TYPE_COMPOSITION_LAYER_PROJECTION};
layer.space = ...;
layer.viewCount = ...;
layer.views = ...;
layer.layerFlags = ...;

// Chain the depth test range to the projection layer.
XrCompositionLayerDepthTestVARJO depthTest{XR_TYPE_COMPOSITION_LAYER_DEPTH_TEST_VARJO, layer.next};
depthTest.depthTestRangeNearZ = 0.0f; // in meters
depthTest.depthTestRangeFarZ = 1.0f; // in meters
layer.next = &depthTest;
```

In this example, between 0 and 1 meter the compositor takes depth from both the video layer and the application layer into account. Beyond 1 meter, it ignores depth completely, and VR content overwrites pixels from the video stream.
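The rule above can be modeled per pixel. This is an illustrative model, not the compositor's implementation: it assumes the range check is made on the video depth (hands inside the range, walls outside it) and that both sources have content in the pixel. Source and resolvePixel are hypothetical names.

```cpp
// Illustrative model only. Depths are distances in meters.
// Assumption: the range check uses the video (real-world) depth.
enum class Source { Video, Application };

Source resolvePixel(float videoDepth, float appDepth, float nearZ, float farZ) {
    const bool inRange = videoDepth >= nearZ && videoDepth <= farZ;
    if (!inRange) {
        return Source::Application;  // depth ignored: VR content overwrites video
    }
    // Inside the range, the nearer surface wins.
    return (videoDepth < appDepth) ? Source::Video : Source::Application;
}
```

With the 0 to 1 meter range from the example, a hand at 0.5 m stays visible in front of a VR object at 3 m, while a wall at 2 m is hidden behind the same object.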

Eye tracking with OpenXR

All Varjo headsets support eye tracking. The Varjo OpenXR runtime fully implements the XR_EXT_eye_gaze_interaction extension. See the official specification for details.

Foveated rendering

With the high-resolution displays in the Varjo XR-3 headset, an application would need to render frames with more than 20 megapixels at 90 frames per second to achieve the best possible image quality. This kind of performance is very difficult to achieve.
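The arithmetic behind that figure: at 20 megapixels per frame and 90 frames per second, the application would need to shade at least 1.8 billion pixels per second. As a compile-time check, using a hypothetical helper named pixelsPerSecond:

```cpp
#include <cstdint>

// Pixels the application must shade per second for a given per-frame pixel count.
constexpr uint64_t pixelsPerSecond(uint64_t pixelsPerFrame, uint64_t framesPerSecond) {
    return pixelsPerFrame * framesPerSecond;
}

// 20 megapixels per frame at 90 frames per second.
static_assert(pixelsPerSecond(20'000'000, 90) == 1'800'000'000, "1.8 gigapixels per second");
```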

To compensate for this, an application could lower the texture resolution at the cost of image quality.

A better solution is to use foveated rendering: track the user’s gaze and render content at the highest resolution only where the user is looking. Varjo has designed an OpenXR extension, XR_VARJO_foveated_rendering, that makes foveated rendering easier to implement. See the official specification for an example and a detailed explanation of the main concepts related to foveated rendering.
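One practical detail when supporting foveation is swapchain sizing. The sketch below assumes the application queries recommended view sizes twice, once plainly and once with XrFoveatedViewConfigurationViewVARJO (foveatedRenderingActive set to XR_TRUE) chained to each XrViewConfigurationView, and then allocates each swapchain at the larger of the two so foveation can be toggled without recreating swapchains. This is one possible design choice, not something the extension requires; Extent and swapchainExtents are hypothetical.

```cpp
#include <algorithm>
#include <cstddef>
#include <cstdint>
#include <vector>

// Hypothetical stand-in for a view's recommended image size.
struct Extent { uint32_t width, height; };

// `normal`: recommended sizes queried without foveation.
// `foveated`: sizes queried with XrFoveatedViewConfigurationViewVARJO chained
// (foveatedRenderingActive = XR_TRUE). Each swapchain is sized to fit both.
std::vector<Extent> swapchainExtents(const std::vector<Extent>& normal,
                                     const std::vector<Extent>& foveated) {
    std::vector<Extent> result;
    for (std::size_t i = 0; i < normal.size() && i < foveated.size(); ++i) {
        result.push_back({std::max(normal[i].width, foveated[i].width),
                          std::max(normal[i].height, foveated[i].height)});
    }
    return result;
}
```

Each frame, the application then renders into a subImage rectangle whose size matches the current mode.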

OpenXR is a new and evolving standard. Check the official OpenXR specification for any recent changes.